MariaDB, a popular relational database management system (RDBMS), is renowned for its reliability, flexibility, and open-source nature. With its extensive feature set, SQL compatibility, and ease of integration, businesses worldwide have made MariaDB a key component of their data architecture. However, for rapidly increasing data volumes and complexity, you might want to leverage more advanced analytics.

Databricks, a robust cloud-based platform built on Apache Spark, offers an impressive analytics solution. By migrating to Databricks, you can utilize its powerful analytics tools and distributed processing capabilities to extract vital insights from your data. This will improve your decision-making and help you gain a competitive advantage.

Let’s look into the details of migrating data from MariaDB to Databricks.

If you're eager to jump straight into the methods for migrating data from MariaDB to Databricks, feel free to click here to skip ahead to the step-by-step instructions.

MariaDB: An Overview

MariaDB, developed by the original creators of MySQL, is a popular open-source relational database known for its performance, scalability, and reliability. It is well-suited for a wide range of applications, including web development, data warehousing, and enterprise-level data management.

You can switch from MySQL to MariaDB without significantly altering your applications, as MariaDB is highly compatible with MySQL and supports most of its commands, interfaces, and APIs.

Here are a few key features of MariaDB:

- MariaDB supports a diverse array of storage engines, including high-performance options like Aria and InnoDB, to efficiently interact with other RDBMS data sources.

- MariaDB’s Sequence Storage Engine allows you to define numerical sequences that are either ascending or descending, given a starting value, ending value, and increment value. This generates ephemeral virtual tables as needed.

- MariaDB is compatible with numerous operating systems, including Windows, Linux, and macOS, and supports a wide range of programming languages such as Python, PHP, Java, C++, and Ruby. This broad compatibility enhances its flexibility for different development environments.

Databricks: An Overview

Databricks is a unified analytics platform that simplifies the building, deploying, sharing, and maintaining enterprise-grade big data solutions at scale. Its cloud-based platform provides a collaborative environment that supports the entire data lifecycle, from raw data ingestion to generating insights for better decision-making.

Databricks also combines the best data lakes and data warehouses, creating a powerful lakehouse architecture. This approach allows organizations to store large volumes of data cost-effectively while still enabling high-performance querying and analysis. The lakehouse architecture ensures that data is readily accessible and can be efficiently processed, supporting the needs of both data engineering and data analytics teams.

Here are a few key features of Databricks:

- Databricks offers a collaborative workspace where teams can share notes, data, and insights on data engineering and machine learning projects for real-time collaboration.

- Built on top of Apache Spark, Databricks provides a fast and scalable platform capable of handling complex processes and large databases efficiently.

- Databricks provides robust tools and features for monitoring data pipelines, enabling organizations to monitor their data processes and workflows closely.

Why Migrate from MariaDB to Databricks?

- Simplify Your Data Platform: Databricks offers a unified platform for all your analytics and AI use cases, including data engineering, data science, and machine learning tasks within a single, integrated environment.

- Scale Cost-effectively: Databricks’ Serverless computing allows you to scale your data processing capabilities on demand without the overhead of managing physical servers. This can significantly enhance cost efficiency and offer considerable improvements in price-performance ratios.

- Accelerate Innovation: With Databricks, you can accelerate innovation through advanced data processing, machine learning, and real-time analytics with open-source technologies like Apache Spark and MLflow.

2 Effective Methods to Migrate Data from MariaDB to Databricks

Let’s look into the different methods for an effective MariaDB-Databricks integration.

- Method 1: Using Estuary Flow to Load Data from MariaDB to Databricks

- Method 2: Using CSV Export/Import to Load Data from MariaDB to Databricks

Method 1: Using Estuary Flow to Load Data from MariaDB to Databricks

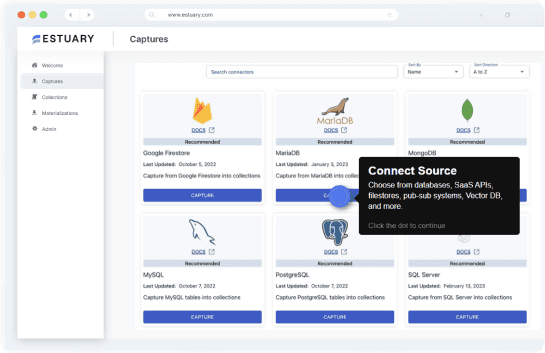

Estuary Flow provides a robust ETL platform for effectively integrating and transforming real-time data. It extracts information from multiple sources and adds the data to Flow collections. The data in Flow collections will then be materialized into a destination.

Estuary offers readily usable connectors to simplify the entire process of moving data from any source to the destination. You do not need to write a single line of code to configure these connectors, making it suitable for users with minimal technical expertise, too.

Here are some of the key features of Estuary Flow:

- Real-time Data Processing: Estuary Flow facilitates real-time data transfer from MariaDB to Databricks. This ensures that downstream applications always have access to the most recent data, enhancing your real-time decision-making capabilities and operational efficiency.

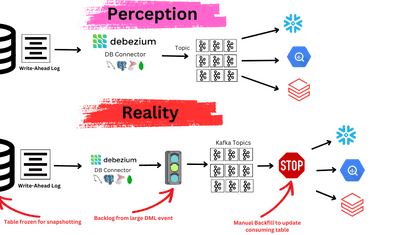

- Change Data Capture: Estuary Flow uses robust log-based CDC algorithms to capture changes at the source effectively. Any modifications in your MariaDB database will be immediately reflected in Databricks without requiring manual intervention, reducing latency and maintaining high data integrity.

- Scalability: Estuary Flow is designed to handle massive data volumes efficiently and can scale up to 7 GB/s. This capability ensures that data-intensive operations can run efficiently without any performance degradation.

The following steps will assist you in migrating your data from MariaDB to Databricks.

Prerequisites

Step 1: Configure MariaDB as the Source

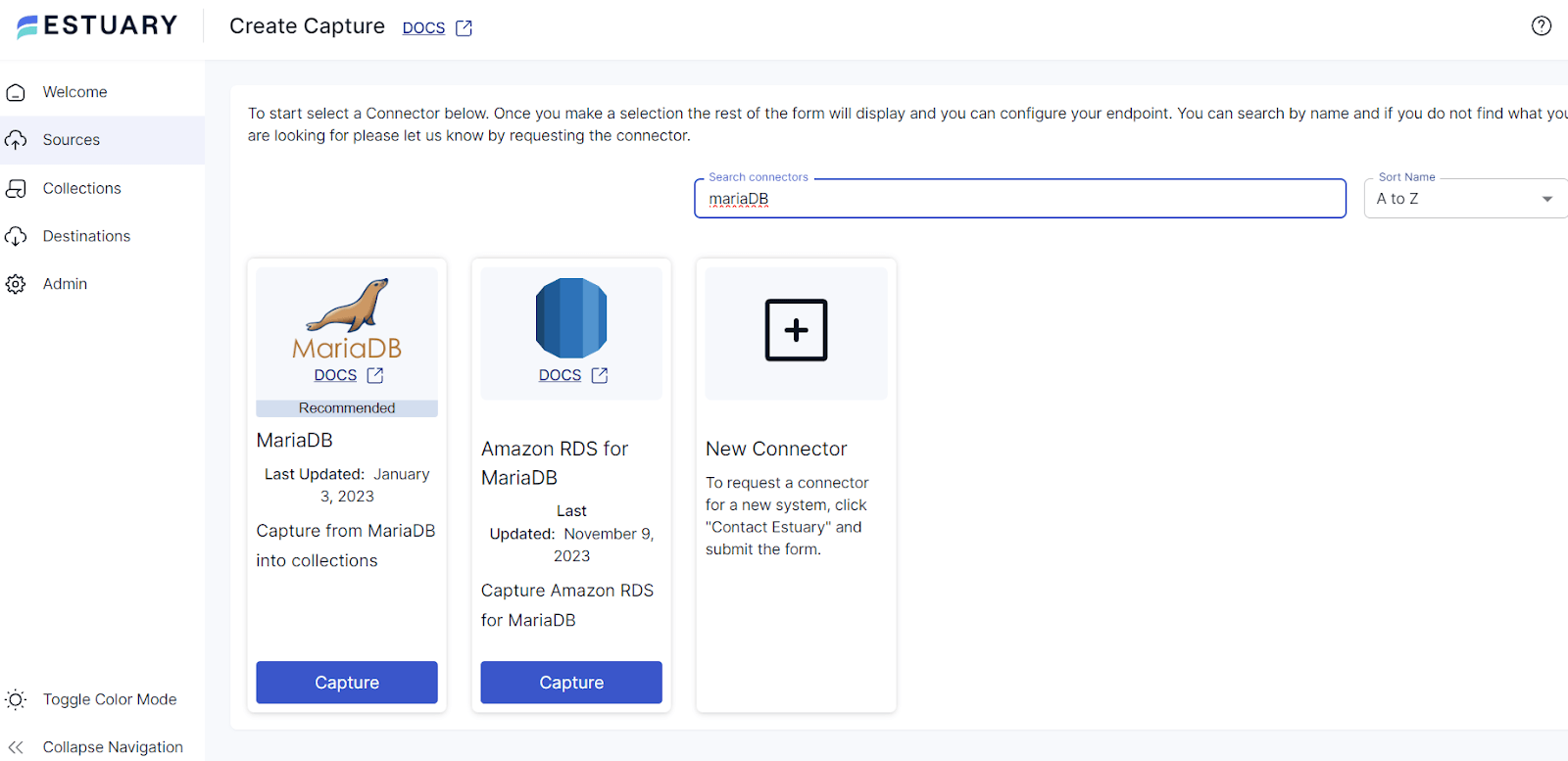

- Sign in to your Estuary account.

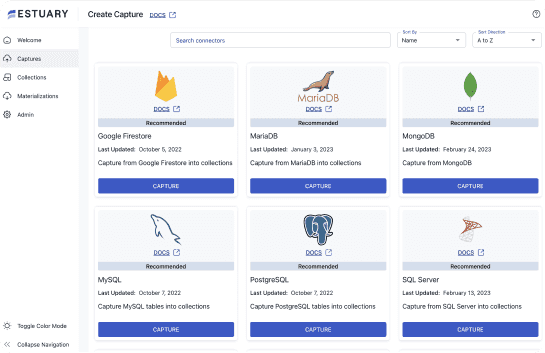

- On the dashboard, click Sources > + NEW CAPTURE.

- In the Search connectors box, type MariaDB. Click the Capture button of the connector when you see it in the search results.

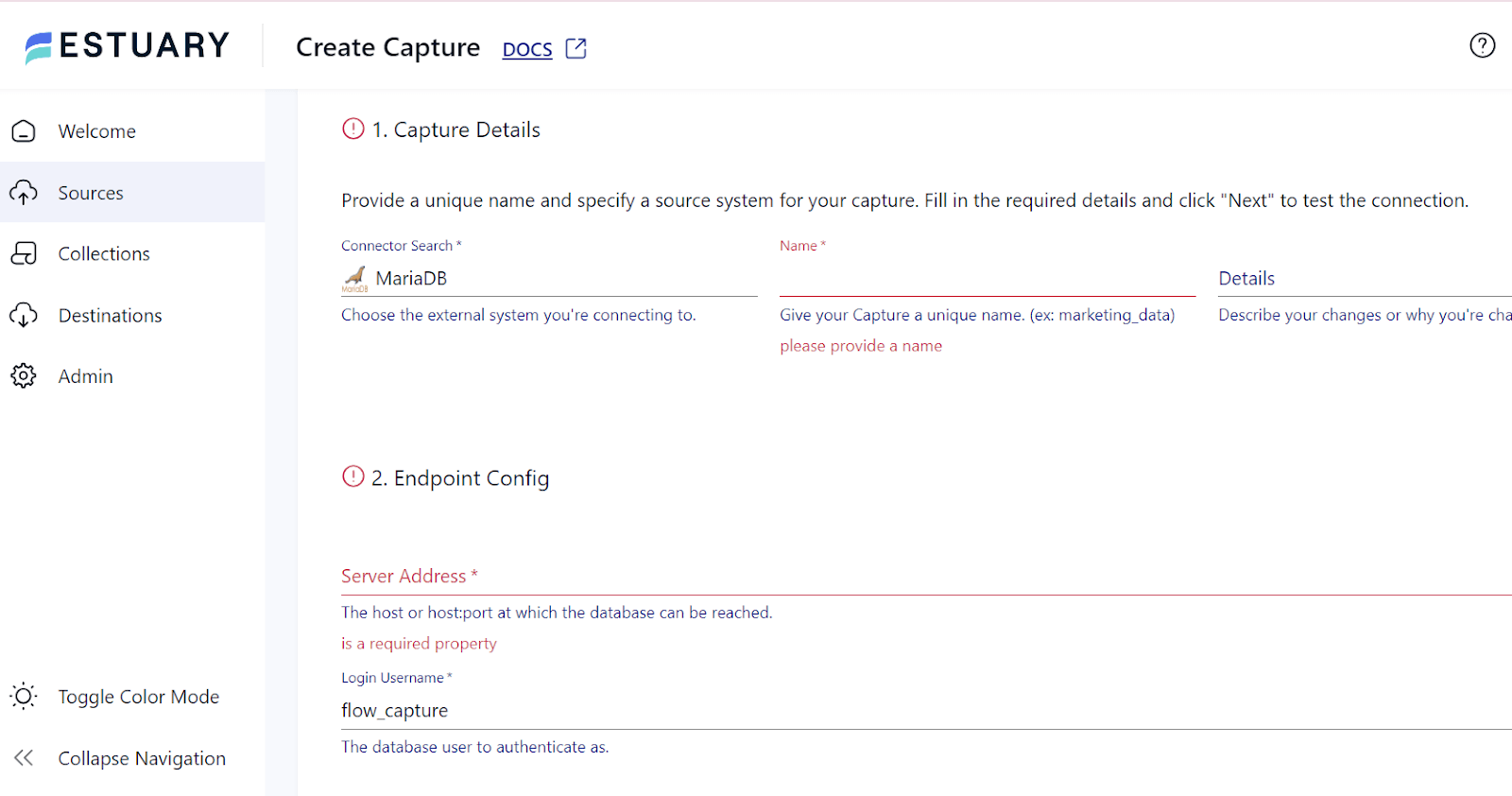

- On the MariaDB connector configuration page, enter a unique Name for your capture. Also, provide other necessary information, such as the Server Address, Login Username, and Login Password.

- Click NEXT > SAVE AND PUBLISH. This CDC connector will capture change events in your MariaDB database via the Binary Log.

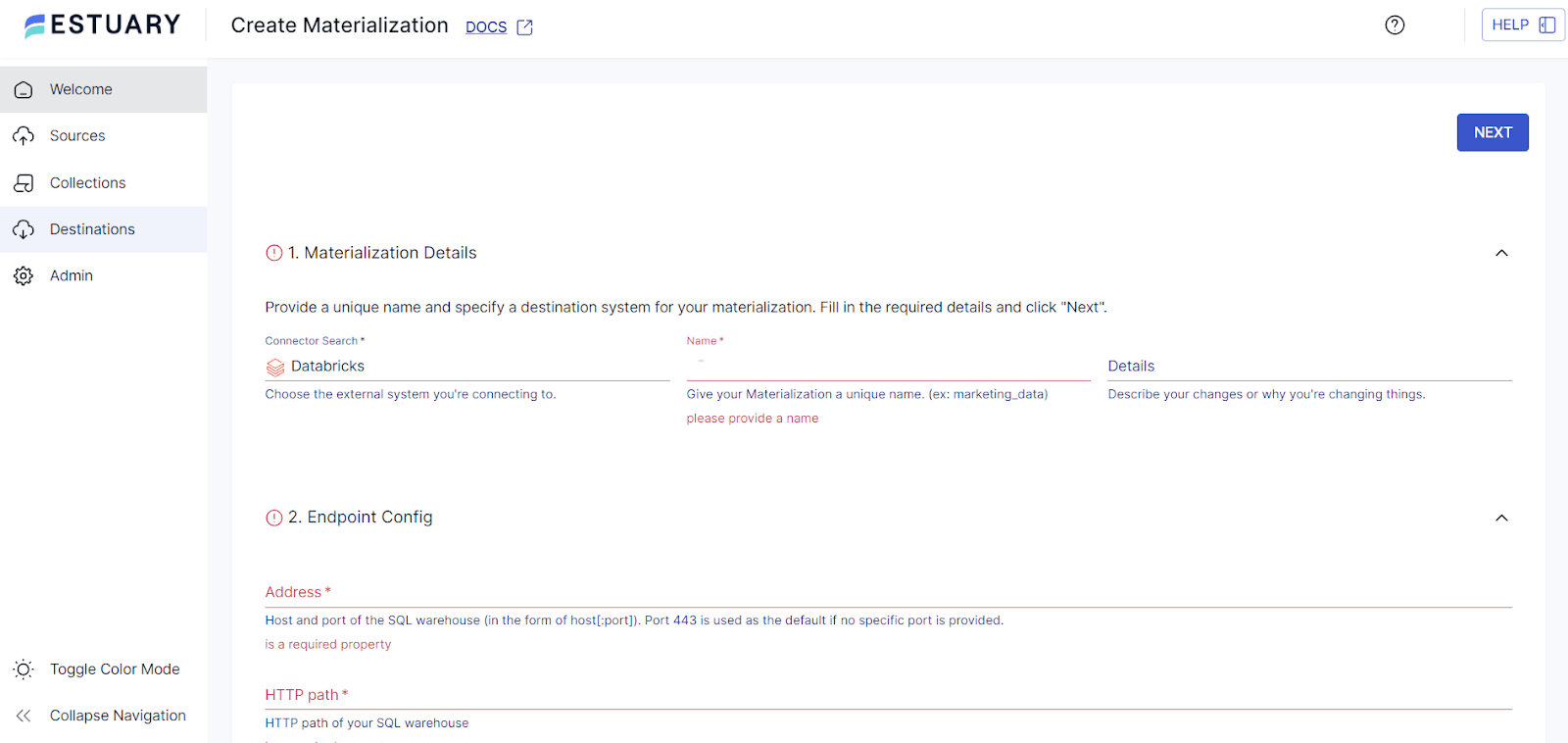

Step 2: Configure Databricks as the Destination

- Following the successful capture, you will see a pop-up window with the capture details. Click on MATERIALIZE COLLECTIONS in this pop-up to proceed with setting up Databricks as the destination end of the integration pipeline.

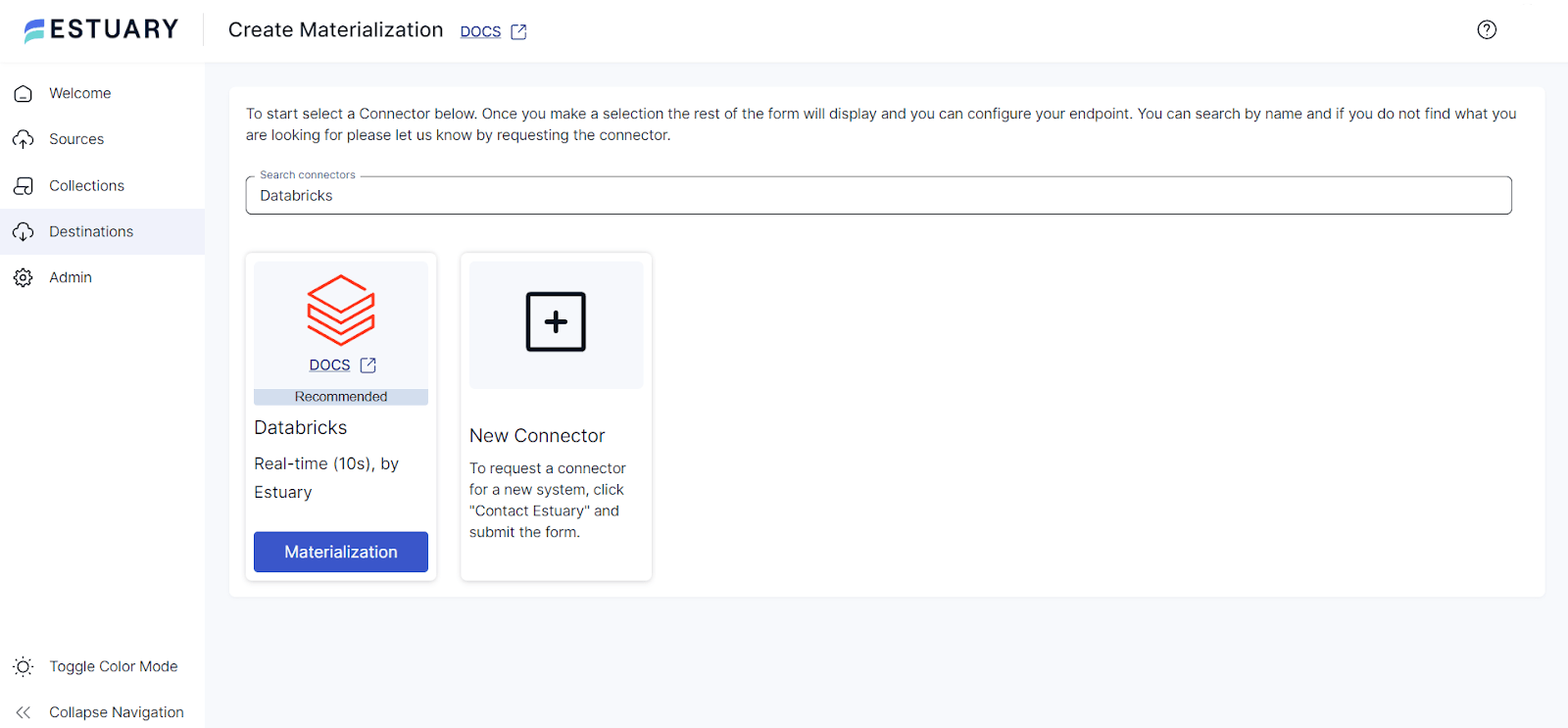

Alternatively, you can set up Databricks as a destination connector by clicking the Destinations option in the left navigation pane of the dashboard. Then, click + NEW MATERIALIZATION.

- On the Create Materialization page, use the Search connectors field to find the Databricks connector. Click the Materialization button of the connector in the search results.

- You will be redirected to the Databricks connector configuration page. Provide the required data in the mandatory fields, including Name, Address, HTTP path, and Catalog Name. For authentication, provide the Personal Access Token data.

- Although the collections you add to your capture will automatically be included in your materialization, you can explicitly link a capture to your materialization using the Source Collections section.

- To connect a capture to your materialization, click the SOURCE FROM CAPTURE button in the Source Collections section.

- Then, click NEXT > SAVE AND PUBLISH to materialize Flow collections of your MariaDB data into Databricks tables.

Method 2: Using CSV Export/Import to Load Data from MariaDB to Databricks

This method involves exporting data from MariaDB as CSV files and then importing the CSV data into Databricks. Here are the 3 easy steps involved in the process:

Step 1: Export Data from MariaDB to CSV

You can export your MariaDB data into CSV format by using the SQL command below:

plaintextSELECT field1, field2, field3 FROM table_name

INTO OUTFILE '/path/to/save/filename.csv'

FIELDS ENCLOSED BY ''

TERMINATED BY ','

ESCAPED BY '"'

LINES TERMINATED BY '\n';This SQL code exports data from specific fields in a table (table_name) to a CSV file (filename.csv). You’ll likely need to adjust the following:

- Replace field1, field2, and field3 with the actual names of the data points you want to extract from the table.

- Update table_name to reflect the real table holding your data.

- Change /path/to/save to the desired location.

- Modify filename.csv to your preferred name.

Step 2: Import CSV into Databricks

In your Databricks account, navigate to the sidebar menu in the UI.

- Select the Data tab from the sidebar menu and click Add Data.

You have two options to add data:

- Drag and drop the files into the UI’s drop zone, or browse and select the files from your local system. The files will be stored in a path similar to the one below:

plaintext /FileStore/tables/<fileName>-<integer>.<fileType>- Then, click the Create Table with UI button to create a new table with specific schema details and import settings.

Step 3: Access and Modify the Data

After uploading the CSV file and creating a table, you can follow these steps to modify the data:

- Select the newly created table and click on the Preview Table to view your data.

- While Databricks attempts to infer the data types of each column automatically, you can manually select the appropriate data type from a list of options.

- Use the left navigation bar to make any desired changes to the data.

- After adjusting your settings, click Create Table to finalize the table configuration.

- You can access the table via the cluster where the CSV file is stored.

Limitations of Using CSV Import/Export to Migrate Data from MariaDB to Databricks

While the CSV export/import method can effectively migrate the data, it has significant limitations, including:

- Lack of Real-Time Integration: CSV export/import doesn’t support real-time synchronization. Any changes to the source data after an export must be manually updated to the destination system. This impacts the efficiency of organizations requiring real-time data analysis and decision-making.

- Time-consuming: Manually exporting and importing CSV files is time-consuming and resource-intensive. Due to the delays involved, this can be particularly inefficient for frequent data transfers.

- Prone to Errors: Manual efforts to migrate the data and the time delays involved increase the chances of data errors and inaccuracies, resulting in reduced data integrity.

Summing It Up

Connecting MariaDB to Databricks can help simplify data manipulation and analysis. To achieve this integration, you can use the manual CSV export/import method or Estuary Flow.

While using CSV files seems straightforward, the method lacks real-time integration and requires repeated manual efforts. On the other hand, Estuary Flow can help overcome these drawbacks with no-code configuration and automation. It eliminates repetitive and resource-intensive tasks for an almost effortless integration process. With Estuary Flow, you can seamlessly migrate your data in just two simple steps:

- Step 1: Connect MariaDB as your source.

- Step 2: Configure Databricks as your destination, initiate real-time data flow

Complete your data integration tasks efficiently while saving considerable time and effort. Create a free Estuary account here to get started!

FAQs

Q1. What is the Databricks Lakehouse platform?

The Databricks Lakehouse Platform is a unified data analytics solution that combines the best features of data lakes and data warehouses. It allows you to store vast amounts of raw data in its native format while providing the robust structure and performance capabilities of a data warehouse.

Q2. MariaDB: Is it free?

Yes, MariaDB Server is free and open-source software operating under the GPLv2 license; it is not affiliated with any commercial organization.

Start streaming your data for free

Build a PipelineAbout the author

Rob Meyer is the VP of Marketing of Estuary. He has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WS02, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.