The growing volume of data and the increasing complexity of modern data systems require a more sophisticated approach to data analysis. Every click, swipe, and interaction creates more data, presenting businesses with the massive challenge of extracting meaningful insights from it. Machine learning statistics is a powerful tool for tackling this challenge.

The power of machine learning statistics lies in its ability to uncover patterns that are hidden in plain sight. By leveraging advanced mathematical models and algorithms, it can identify relationships and connections between seemingly disparate data points.

In this article, we’ll give you an overview of machine learning statistics and its main types. We will also discuss practical applications and important challenges of machine learning statistics in real-time data scenarios.

Reading this article will help you learn how machine learning can be adapted to real-time data scenarios and which aspects of data architecture are needed to support real-time machine learning.

Machine Learning Statistics: A Powerful Tool For Data Analysis

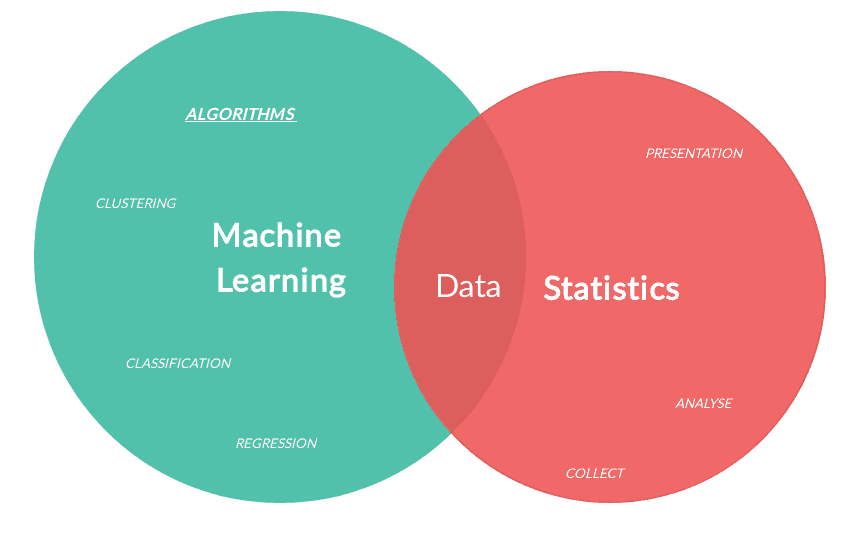

Machine learning statistics has revolutionized data analysis. Combining statistical methods with cutting-edge algorithms lets us dig deeper into data and uncover patterns and trends that might go unnoticed with traditional statistical analysis techniques.

But before we get into its intricacies, let’s lay the groundwork by exploring the fundamentals and comparing machine learning and statistics.

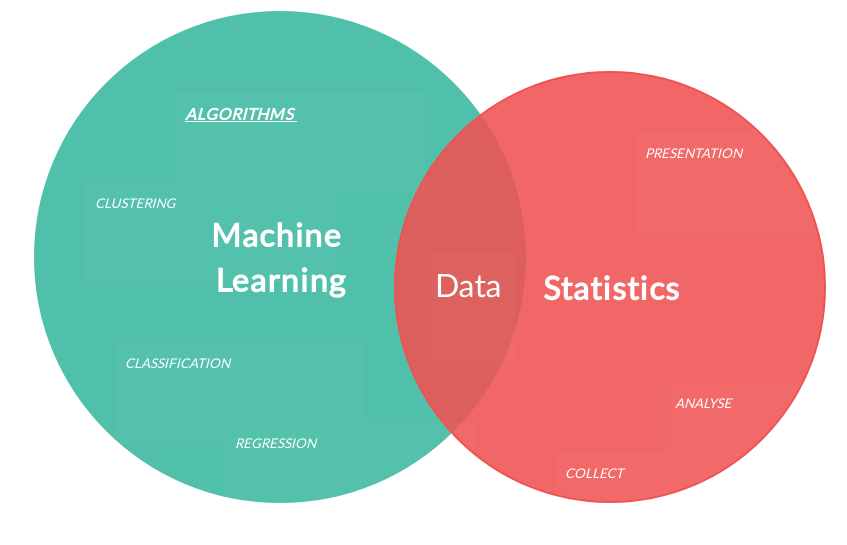

The main goal of machine learning is to train computers to draw inferences and conclusions from data without being explicitly programmed.

Machine learning statistics is a subfield that focuses on using statistical techniques to create, evaluate, and optimize these learning algorithms. In other words, it combines the best of both worlds, statistics and machine learning, to extract valuable insights from data.

3 Common Machine Learning Techniques

Equipped with a basic understanding of machine learning, we can now dive deeper into some of the key techniques and methods that make it such a powerful tool for data analysis.

- Regression: One of the most common techniques in machine learning, regression analysis models the relationship between input variables and a continuous output variable. This method helps us make predictions and understand the impact of various factors on the outcome.

- Classification: Classification algorithms learn from labeled examples to assign new data points to predefined classes or groups, for example labeling an email as spam or not spam.

- Clustering: Clustering techniques group similar data points together based on their features, without relying on predefined labels. This is particularly helpful when we want to uncover hidden structures or patterns within large datasets.
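To make the regression idea concrete, here is a minimal sketch of simple linear regression (one input, one output) fitted by ordinary least squares. The data and the function name `fit_simple_linear_regression` are hypothetical illustrations, not part of any specific library.

```python
from statistics import mean

def fit_simple_linear_regression(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Slope = sum of co-deviations / sum of squared x deviations
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    # The fitted line always passes through the point of means (x_bar, y_bar)
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical data: ad spend (x) vs. sales (y)
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]
slope, intercept = fit_simple_linear_regression(xs, ys)
prediction = slope * 6.0 + intercept  # predicted sales for an unseen input
```

On Python 3.10 and later, the standard library’s `statistics.linear_regression` returns the same slope and intercept. The explicit version shows where those numbers come from.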

2 Types Of Statistics

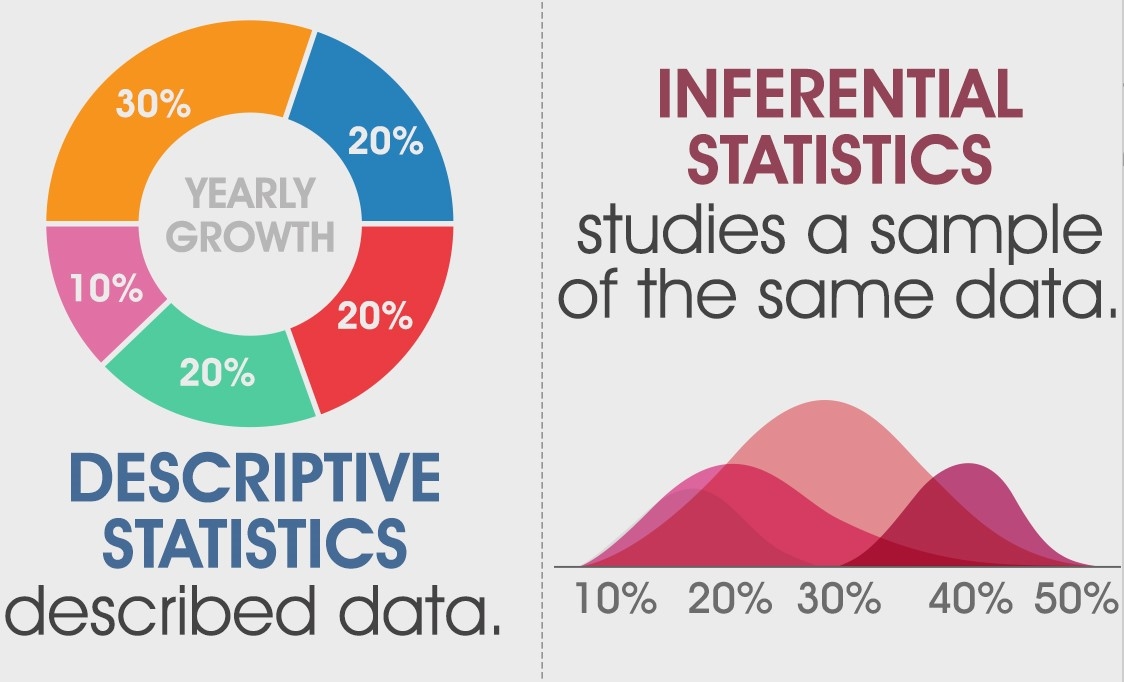

There are 2 main types of statistics: descriptive and inferential. By employing a combination of both, machine learning engineers can analyze complex data sets and make accurate predictions.

Descriptive Statistics

Descriptive statistics are a vital component of exploratory data analysis. They help you understand the underlying structure and characteristics of a dataset by summarizing and visualizing the data, which makes it easier to identify trends and potential outliers.

There are 2 primary subtypes of descriptive statistics used in data science:

- Measures of Central Tendency: These statistics help determine the central position of a dataset. The most common measures include the mean, median, and mode. By identifying the central point, you can get a better understanding of the dataset’s overall behavior.

- Measures of Dispersion: These statistics describe the spread of data points within a dataset. Common measures of dispersion include range, variance, standard deviation, and interquartile range. They are often examined alongside the shape of the data’s distribution, for example whether it is approximately normal. These measures help you understand the variability of the data, which informs the choice of the most appropriate machine learning algorithm.
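The sketch below computes the measures listed above with Python’s built-in `statistics` module (`quantiles` requires Python 3.8+). The response-time sample is hypothetical and deliberately includes one outlier.

```python
import statistics

# Hypothetical sample: API response times in milliseconds (note the outlier, 95)
response_ms = [12, 15, 15, 18, 21, 24, 30, 95]

q1, _, q3 = statistics.quantiles(response_ms, n=4)  # quartiles, default "exclusive" method

summary = {
    # Measures of central tendency
    "mean": statistics.mean(response_ms),
    "median": statistics.median(response_ms),
    "mode": statistics.mode(response_ms),
    # Measures of dispersion
    "range": max(response_ms) - min(response_ms),
    "variance": statistics.variance(response_ms),  # sample variance (n - 1 denominator)
    "stdev": statistics.stdev(response_ms),
    "iqr": q3 - q1,
}
```

In this sample, the single outlier pulls the mean (28.75) well above the median (19.5). The interquartile range (13.5) is unaffected by it. Comparing these numbers is a quick way to spot skew before choosing a model.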

Inferential Statistics

Inferential statistics enable you to make predictions and generalizations about a population based on a sample of data. They are crucial in determining the effectiveness of machine learning models and understanding the relationships between variables.

There are 3 main subtypes of inferential statistics used for analyzing data:

- Hypothesis Testing: Hypothesis testing allows you to test the validity of a claim or assumption about a population based on sample data and determine whether the observed patterns in the data are due to chance or an actual relationship between variables. Common hypothesis testing methods include t-tests, chi-square tests, and analysis of variance (ANOVA).

- Confidence Intervals: Confidence intervals estimate the range within which a population parameter is likely to fall, based on sample data. They quantify the uncertainty associated with an estimate and can help you understand the precision of your model’s predictions. By incorporating confidence intervals into your analysis, you can make more informed decisions about how much to trust a model’s estimates.

- Regression Modeling: Regression modeling is a special case because it is commonly described both as a machine learning approach and as an inferential statistics technique. It establishes relationships between variables and outcomes and allows you to make predictions based on those relationships.
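As a rough illustration of the first two techniques, the sketch below computes a 95% confidence interval for a mean and tests whether two means are equal, using only Python’s standard library. It assumes large samples, so it uses the normal distribution (a z-test) rather than Student’s t. For small samples, a t-test such as SciPy’s `scipy.stats.ttest_ind` is the usual choice. The data is simulated, and the scenario (comparing two model variants) is hypothetical.

```python
import math
import random
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for a population mean."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    se = stdev(sample) / math.sqrt(len(sample))     # standard error of the mean
    m = mean(sample)
    return m - z * se, m + z * se

def two_sample_z_test(a, b):
    """Large-sample test of H0: the two population means are equal.

    Returns the z statistic and the two-sided p-value.
    """
    se = math.sqrt(stdev(a) ** 2 / len(a) + stdev(b) ** 2 / len(b))
    z = (mean(a) - mean(b)) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Simulated per-request scores from two hypothetical model variants
rng = random.Random(42)
variant_a = [rng.gauss(10.0, 2.0) for _ in range(500)]
variant_b = [rng.gauss(11.0, 2.0) for _ in range(500)]

low, high = mean_confidence_interval(variant_a)
z, p_value = two_sample_z_test(variant_a, variant_b)
```

A small p-value means a difference this large would be unlikely if the two variants truly had the same mean. It does not, by itself, say the difference is practically important.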

What Happens When We Have Real-time Data?

While the field of machine learning has been around for several decades, real-time machine learning has gained significant traction in recent years, and the global machine learning market is expected to grow to USD 226 billion by 2030.

Technological advances, such as the availability of real-time data sources, the breakdown of data silos, and the preservation of historical data, have made machine learning skills highly relevant in today’s landscape.

Simply put, real-time machine learning is the process of using machine learning models to make autonomous, continuous decisions that impact businesses in real time.

Real-time machine learning finds its place in mission-critical applications like fraud detection, recommendation systems, dynamic pricing, and loan application approvals. These applications must run “online” and make decisions in real time – sometimes, even milliseconds matter.

But how does this compare to traditional machine learning?

Analytical machine learning, or the “offline” kind, is designed for human-in-the-loop decision-making. It feeds into reports, dashboards, and business intelligence tools, and is optimized for large-scale batch processing.

While both approaches have their merits, real-time machine learning offers an extra edge in use cases where decisions must be made the moment new data arrives.

Real-Time Machine Learning Architecture

So how does data architecture fit into all this?

Modern data architecture is becoming increasingly important. Nobody likes data sprawl, data silos, or manual processes that hinder developer productivity and innovation.

In fact, according to the latest State of the Data Race survey report, over three-quarters of tech leaders and practitioners consider real-time data a must-have, and almost as many have ML in production. A solid data architecture plays a crucial role in enabling real-time machine learning capabilities.

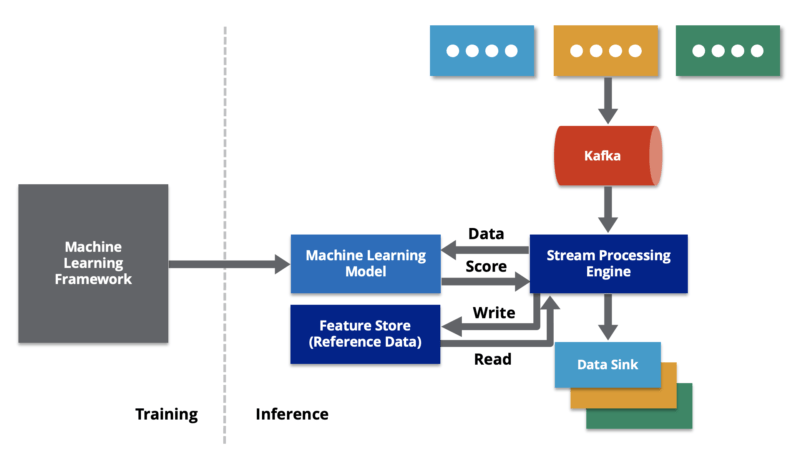

Now let’s take a look at the 3 key elements of real-time data architectures for ML applications.

Event-Driven Architecture

Event-driven architecture is a design approach in which a system reacts to incoming events, such as new records arriving on a real-time data stream. This type of architecture is well suited to real-time ML because it enables quick decisions based on the latest information. With event-driven architecture, your system stays nimble and adapts to changes as they happen.
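Here is a minimal sketch of the event-driven pattern, assuming an in-process event bus. Handlers subscribe to an event type and run as soon as a matching event is published. The event name `transaction.created`, the payload fields, and the threshold rule standing in for a trained model are all hypothetical. In production, the bus would typically be a message broker and the handler would call a real model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List

@dataclass
class Event:
    type: str
    payload: dict

Handler = Callable[[Event], None]

class EventBus:
    """In-process publish/subscribe: handlers react to events as they arrive."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: Event) -> None:
        # Every handler registered for this event type runs immediately
        for handler in self._handlers[event.type]:
            handler(event)

decisions = []

def score_transaction(event: Event) -> None:
    # Hypothetical rule standing in for a real-time model prediction
    amount = event.payload["amount"]
    decision = "review" if amount > 1000 else "approve"
    decisions.append((event.payload["id"], decision))

bus = EventBus()
bus.subscribe("transaction.created", score_transaction)
bus.publish(Event("transaction.created", {"id": "t1", "amount": 42.0}))
bus.publish(Event("transaction.created", {"id": "t2", "amount": 5000.0}))
```

The producer of an event never calls the scoring code directly. It only publishes, so new consumers, such as a logging or monitoring handler, can be added without changing the producer.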

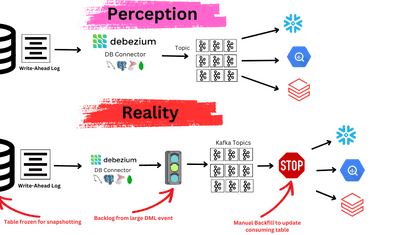

Real-Time Data Pipelines & Transformations

Data pipelines are responsible for moving and processing data from its source to its destination. In real-time ML, these pipelines handle the live data stream and apply the transformations needed to make the data ready for input into the model. This ensures that your ML model has the most relevant and up-to-date information to work with, which is crucial for making accurate real-time predictions.
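The sketch below shows the idea with Python generators. Each stage consumes records from the previous one and yields transformed records immediately, so a record flows through the whole pipeline as soon as it arrives instead of waiting for a batch. The record fields (`user_id`, `amount`, `ts`) and the derived features are hypothetical, and a list of JSON strings stands in for a live stream.

```python
import json
from datetime import datetime
from typing import Iterable, Iterator

def parse(lines: Iterable[str]) -> Iterator[dict]:
    """Decode raw JSON records (a list stands in for a live stream here)."""
    for line in lines:
        yield json.loads(line)

def drop_invalid(records: Iterable[dict]) -> Iterator[dict]:
    """Filter out records missing fields the model needs."""
    for r in records:
        if r.get("user_id") and r.get("amount") is not None:
            yield r

def add_features(records: Iterable[dict]) -> Iterator[dict]:
    """Derive model-ready features from each record."""
    for r in records:
        amount = float(r["amount"])
        yield {
            "user_id": r["user_id"],
            "amount": amount,
            "is_large": amount > 1000,
            "hour": datetime.fromisoformat(r["ts"]).hour,
        }

raw_stream = [
    '{"user_id": "u1", "amount": "250.0", "ts": "2024-05-01T14:03:00+00:00"}',
    '{"user_id": "u2", "amount": null, "ts": "2024-05-01T14:04:00+00:00"}',
    '{"user_id": "u3", "amount": 1800, "ts": "2024-05-01T09:15:00+00:00"}',
]

# Stages are chained lazily; nothing runs until the output is consumed
model_inputs = list(add_features(drop_invalid(parse(raw_stream))))
```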

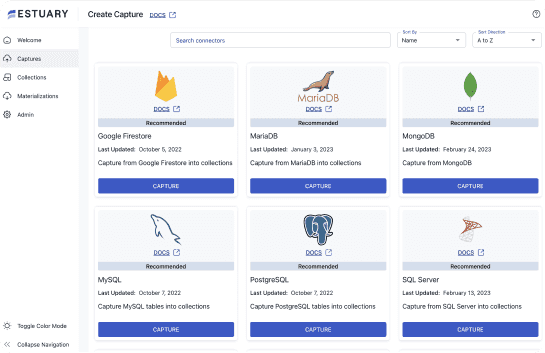

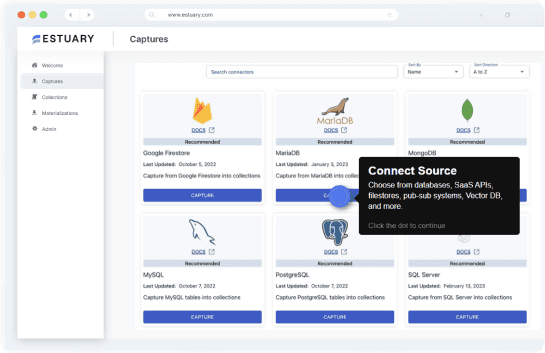

Estuary Flow is an excellent tool for building and managing real-time data pipelines. It can connect to hundreds of data sources and destinations, including databases, APIs, and messaging systems.

Flow’s real-time streaming capabilities can process large volumes of data as it arrives, ensuring that machine learning models receive the most up-to-date information available. This allows you to make precise predictions and decisions based on the latest data.

Estuary Flow can integrate with many third-party tools and platforms including cloud services and data visualization tools. This enables companies to extend their capabilities and create a fully integrated data processing and analysis system. Its flexible architecture and powerful transformation capabilities allow businesses to create custom transformations that fit their specific requirements.

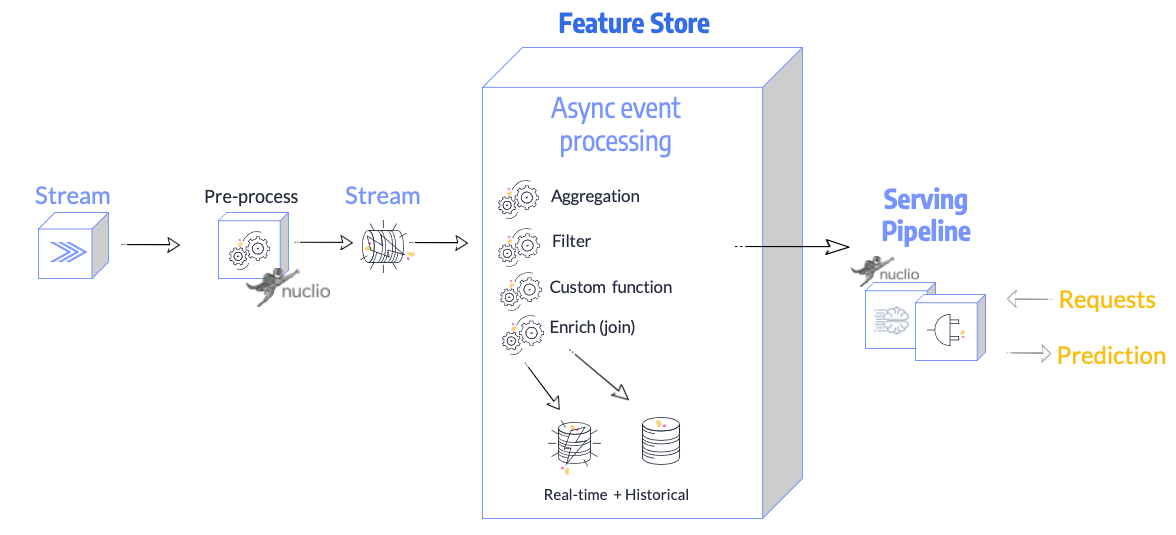

Feature Store & Its Role In Real-Time ML

A feature store is a central repository for storing and managing the features that ML models use, both during training and at prediction time. In real-time ML, the feature store plays a critical role. It must serve features with extremely low latency, which is why it is often powered by in-memory technologies.

The feature store gives real-time models access to the latest data so they can update on the fly. This helps them stay current and adapt to new patterns as they emerge, making your real-time ML system more effective and responsive.
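To illustrate the serving side, here is a toy in-memory feature store. It keeps only the latest value of each feature per entity and treats values older than a freshness window as missing. The class, the feature names, and the 60-second window are hypothetical. Production feature stores typically add persistence, replication, and a separate offline store for training.

```python
import time
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional

@dataclass
class FeatureValue:
    value: Any
    updated_at: float  # seconds since the epoch

class InMemoryFeatureStore:
    """Toy online store: latest value of each feature per entity, held in a dict."""

    def __init__(self, max_age_seconds: float) -> None:
        self.max_age_seconds = max_age_seconds
        self._rows: Dict[str, Dict[str, FeatureValue]] = {}

    def put(self, entity_id: str, name: str, value: Any, ts: Optional[float] = None) -> None:
        """Write (or overwrite) the latest value of one feature."""
        ts = time.time() if ts is None else ts
        self._rows.setdefault(entity_id, {})[name] = FeatureValue(value, ts)

    def get_features(self, entity_id: str, names: Iterable[str],
                     now: Optional[float] = None) -> Dict[str, Any]:
        """Read features for one entity; missing or stale values come back as None."""
        now = time.time() if now is None else now
        row = self._rows.get(entity_id, {})
        result: Dict[str, Any] = {}
        for name in names:
            fv = row.get(name)
            is_fresh = fv is not None and now - fv.updated_at <= self.max_age_seconds
            result[name] = fv.value if is_fresh else None
        return result

store = InMemoryFeatureStore(max_age_seconds=60)
store.put("user_42", "txn_count_1h", 3, ts=1000.0)
store.put("user_42", "avg_amount_1h", 57.5, ts=1000.0)
fresh = store.get_features("user_42", ["txn_count_1h", "avg_amount_1h", "country"], now=1030.0)
stale = store.get_features("user_42", ["txn_count_1h"], now=1100.0)
```

Returning `None` for stale values, rather than silently serving old data, lets the model or a fallback rule decide how to handle missing features.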

To fully understand the potential of real-time machine learning and its architecture, you should learn the key aspects of adapting machine learning statistics to real-time data. Let’s take a look.

Adapting Machine Learning Statistics To Real-Time Data

Here are 3 techniques that play a crucial role in enhancing the performance of machine learning models in various real-world scenarios.

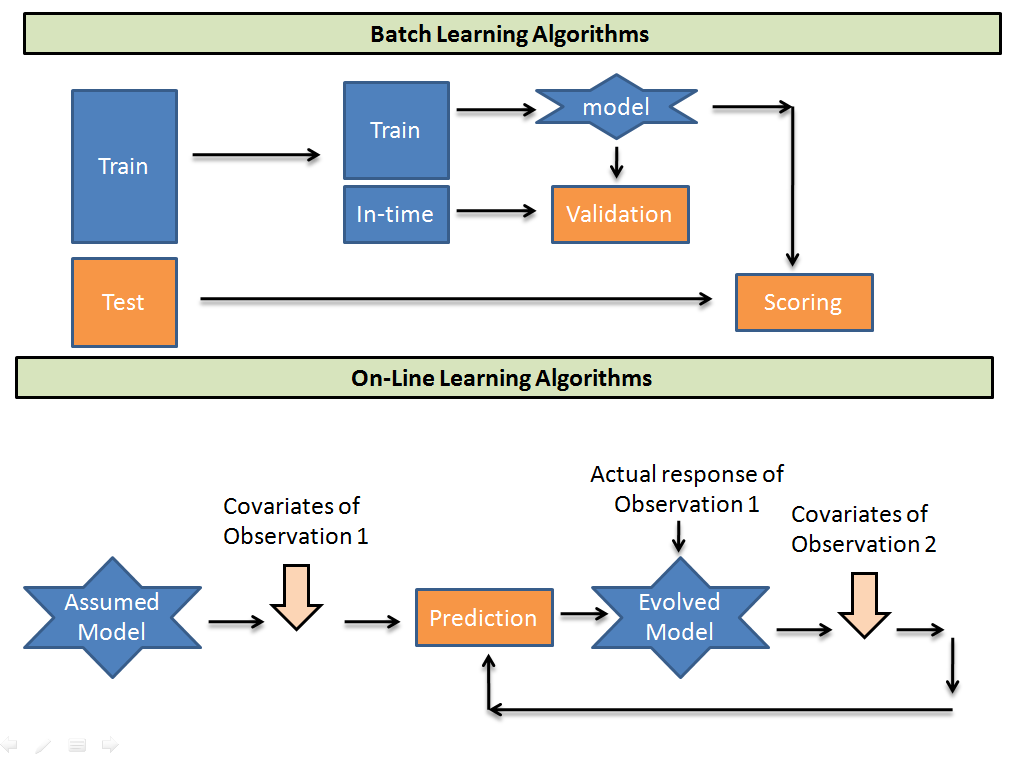

Online Learning

Online learning lets a model update its parameters incrementally as each new data point (or small batch) arrives, rather than retraining from scratch. This helps the model adapt to real-time changes in the system and maintain its accuracy. But implementing online learning isn’t easy.

You will face numerous challenges like skewed classes, overfitting or underfitting, and potential DDoS attacks. Online learning can also be prone to catastrophic interference which makes it even more challenging.

In terms of technical architecture, online learning requires a different approach. You can't just have multiple instances running like with traditional techniques. Instead, you need a single model that rapidly consumes new data and serves learned parameters through an API. This means it’s only vertically scalable, presenting an engineering challenge.

Recent advancements in deep learning have opened the possibility of training deep networks on data streams. This approach, known as streaming learning, allows models to be updated in real time as new data becomes available.
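Here is a minimal sketch of online learning, assuming a linear model trained with stochastic gradient descent on squared error. Each incoming example triggers one small parameter update, and the same object both learns and serves predictions. This mirrors the single-model setup described above. The class name, learning rate, and simulated stream are hypothetical. For real use, scikit-learn’s `SGDRegressor` offers a comparable `partial_fit` method.

```python
import random
from typing import List, Sequence

class OnlineLinearRegressor:
    """Linear model y ≈ w·x + b, updated one example at a time with SGD."""

    def __init__(self, n_features: int, learning_rate: float = 0.05) -> None:
        self.w: List[float] = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x: Sequence[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def learn_one(self, x: Sequence[float], y: float) -> float:
        """One gradient step on squared error for (x, y); the factor 2 is folded into lr."""
        error = self.predict(x) - y
        for i, xi in enumerate(x):
            self.w[i] -= self.lr * error * xi
        self.b -= self.lr * error
        return error

# Simulated stream where the true relationship is y = 3*x1 - 2*x2 + 1 plus noise
rng = random.Random(0)
model = OnlineLinearRegressor(n_features=2)
for _ in range(5000):
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    y = 3 * x[0] - 2 * x[1] + 1 + rng.gauss(0, 0.1)
    model.learn_one(x, y)
```

Because each update uses only the current example, memory stays constant no matter how long the stream runs. The learning rate trades off how quickly the model tracks change against how noisy its parameters are.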

Real-Time Feature Extraction & Dimensionality Reduction

These 2 processes are essential for optimizing the performance of machine learning models and ensuring that they can operate effectively in real-time scenarios.

Feature extraction is about deriving the most relevant and informative features from the data.

In real-time scenarios, this means identifying and extracting those features that are critical for making accurate predictions as new data comes in. This is a continuous process as the system must adapt to the ever-changing data landscape.

One of the main challenges in real-time feature extraction is keeping up with the speed of incoming data. To tackle this issue, we need efficient algorithms that can quickly process and extract useful features without compromising accuracy.

This is where techniques like incremental feature selection and online feature extraction come into play, as they allow for continuous updating of the feature set as new data arrives.
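Many online features, such as a standardized transaction amount, depend on statistics that must be updated per record without storing the full history. One standard way to do this is Welford’s algorithm, sketched below for a single numeric feature. It illustrates the continuous-update idea behind online feature extraction rather than any particular incremental feature selection method. The class name and sample values are hypothetical.

```python
import math

class RunningStats:
    """Welford's algorithm: streaming mean and variance with O(1) memory."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)  # uses both the old and the new mean

    @property
    def variance(self) -> float:
        """Sample variance (n - 1 denominator); 0.0 until two values are seen."""
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0

    def standardize(self, x: float) -> float:
        """z-score of x under the statistics seen so far."""
        sd = math.sqrt(self.variance)
        return (x - self.mean) / sd if sd > 0 else 0.0

stats = RunningStats()
for amount in [2, 4, 4, 4, 5, 5, 7, 9]:  # hypothetical feature values arriving one by one
    stats.update(amount)
z = stats.standardize(9)
```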

On the other hand, dimensionality reduction is the process of simplifying the data representation by removing redundant features or combining correlated features.

This not only helps improve the efficiency of the ML model but also reduces the risk of overfitting.

In real-time scenarios, dimensionality reduction needs to be performed quickly and adaptively. Techniques like online principal component analysis (PCA) and incremental PCA can be used to achieve this goal. These methods allow for the continuous updating of the reduced feature space as new data comes in and ensure that the model stays up-to-date and performs efficiently.
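Full incremental PCA implementations exist, for example scikit-learn’s `IncrementalPCA` with its `partial_fit` method. As a dependency-free illustration of online PCA, the sketch below uses Oja’s rule. This classic update estimates only the first principal component from a stream, one point at a time. The learning rate, the simulated data, and the class name are assumptions made for this example.

```python
import math
import random
from typing import List, Sequence

class OjaPCA:
    """Streaming estimate of the first principal component via Oja's rule."""

    def __init__(self, n_features: int, learning_rate: float = 0.005) -> None:
        self.w: List[float] = [1.0] + [0.0] * (n_features - 1)  # arbitrary unit-length start
        self.mean: List[float] = [0.0] * n_features
        self.n = 0
        self.lr = learning_rate

    def update(self, x: Sequence[float]) -> None:
        # Running mean, so the component is estimated on (approximately) centered data
        self.n += 1
        self.mean = [m + (xi - m) / self.n for m, xi in zip(self.mean, x)]
        xc = [xi - m for xi, m in zip(x, self.mean)]
        y = sum(wi * xi for wi, xi in zip(self.w, xc))  # projection onto current estimate
        # Oja's rule: w <- w + lr * y * (x - y * w); the -y^2 * w term keeps ||w|| near 1
        self.w = [wi + self.lr * y * (xi - y * wi) for wi, xi in zip(self.w, xc)]

    def transform(self, x: Sequence[float]) -> float:
        """Project a point onto the estimated component: a 1-D reduced feature."""
        return sum(wi * (xi - m) for wi, xi, m in zip(self.w, x, self.mean))

# Simulated 2-D stream that varies mostly along the direction (1, 1) / sqrt(2)
rng = random.Random(1)
pca = OjaPCA(n_features=2)
for _ in range(5000):
    along, across = rng.gauss(0, 3), rng.gauss(0, 0.3)
    pca.update([5 + (along - across) / math.sqrt(2),
                -2 + (along + across) / math.sqrt(2)])
```

After the stream, `pca.w` points (up to sign) along the direction of greatest variance. `transform` then reduces each new 2-D point to a single number.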

Real-Time Anomaly Detection & Pattern Recognition

In the context of real-time applications, identifying anomalies and recognizing patterns swiftly is of utmost importance. As data arrives continuously, models must adapt rapidly to ensure accurate detection and recognition.

Anomaly detection is the process of identifying unusual data points or patterns that deviate from the norm. Timely detection of these deviations is crucial in preventing system failures, identifying fraud, or addressing security breaches.

For real-time detection, consider employing machine learning models that can be updated quickly as new data flows in. Techniques such as online or streaming learning are well suited for this purpose, allowing models to adapt on the fly and maintain their performance.
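The sketch below is one simple streaming detector along these lines, an assumption rather than a prescribed method. It keeps an exponentially weighted moving average (EWMA) and variance of a metric, and it flags points that lie more than a chosen number of standard deviations from the average. Older observations fade, so the baseline adapts to gradual change. The smoothing factor, threshold, warm-up length, and metric values are hypothetical.

```python
import math

class EwmaAnomalyDetector:
    """Flag points far from an exponentially weighted baseline that adapts over time."""

    def __init__(self, alpha: float = 0.05, threshold: float = 3.0, warmup: int = 20) -> None:
        self.alpha = alpha          # weight given to the newest observation
        self.threshold = threshold  # how many standard deviations count as anomalous
        self.warmup = warmup        # observations to see before flagging anything
        self.mean = None
        self.var = 0.0
        self.n = 0

    def update(self, x: float) -> bool:
        """Score x against the current baseline, then fold it in. Returns True if anomalous."""
        self.n += 1
        if self.mean is None:
            self.mean = x
            return False
        diff = x - self.mean
        std = math.sqrt(self.var)
        is_anomaly = self.n > self.warmup and std > 0 and abs(diff) / std > self.threshold
        # Incremental exponentially weighted mean and variance
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly

# Hypothetical metric: a stable signal around 100 with one spike
readings = [100, 101, 99, 100, 102, 98, 100, 101, 99, 100] * 5 + [180, 100, 101, 99]
detector = EwmaAnomalyDetector()
flags = [detector.update(v) for v in readings]
anomalies = [i for i, flagged in enumerate(flags) if flagged]
```

Folding flagged points into the baseline, as this sketch does, temporarily widens the variance after a spike. Some systems skip the update for flagged points instead.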

Pattern recognition, on the other hand, involves identifying regularities and trends in the data. In real-time scenarios, this means continuously learning from the incoming data to spot patterns that can help in decision-making or predictions.

Adaptive models that can learn continuously are essential for this task as well, enabling the system to recognize emerging patterns and respond accordingly.

A critical factor in both anomaly detection and pattern recognition is the speed and efficiency of feature extraction. In real-time scenarios, it’s important to identify and extract the most relevant features from the incoming data as quickly as possible. This ensures that models are utilizing the latest information to make accurate predictions or detections.

Incremental feature selection and online feature extraction techniques can help achieve this by enabling continuous updates to the feature set as new data arrives.

Building on our discussion of adapting machine learning statistics to real-time data, let’s now see how this approach has been practically applied in real-time data scenarios.

Applications Of Machine Learning Statistics In Real-Time Data Scenarios

Here are some examples of how real-time machine learning statistics improve our businesses and personal lives.

TikTok’s Personalized Social Media Experiences

TikTok, the hugely popular app for sharing short videos, uses real-time machine learning statistics to give you a tailor-made feed. By looking at your viewing history, likes, comments, and shares, TikTok shows you videos you’re likely to enjoy, keeping you hooked.

Google Maps Traffic Prediction

We all use Google Maps, and its traffic prediction is a lifesaver. Using real-time data from users, Google’s ML algorithms estimate the number of cars on a road and their speed. By analyzing millions of data points over time, Google identifies patterns and trends to anticipate traffic congestion and recommend alternate routes.

Spam Detection In Gmail

Nobody likes spam emails. But we also don’t want important emails to end up in the spam folder. That’s why Gmail uses advanced machine learning systems to detect spam accurately. Its algorithms are updated in response to emerging threats, technological advances, and user feedback.

Payoneer’s Real-time Fraud Detection

Digital payment platforms like Payoneer use real-time anomaly detection to spot and stop fraud. Instead of fixing problems after they happen, ML algorithms analyze things like transaction history and location to catch fraud before it occurs.

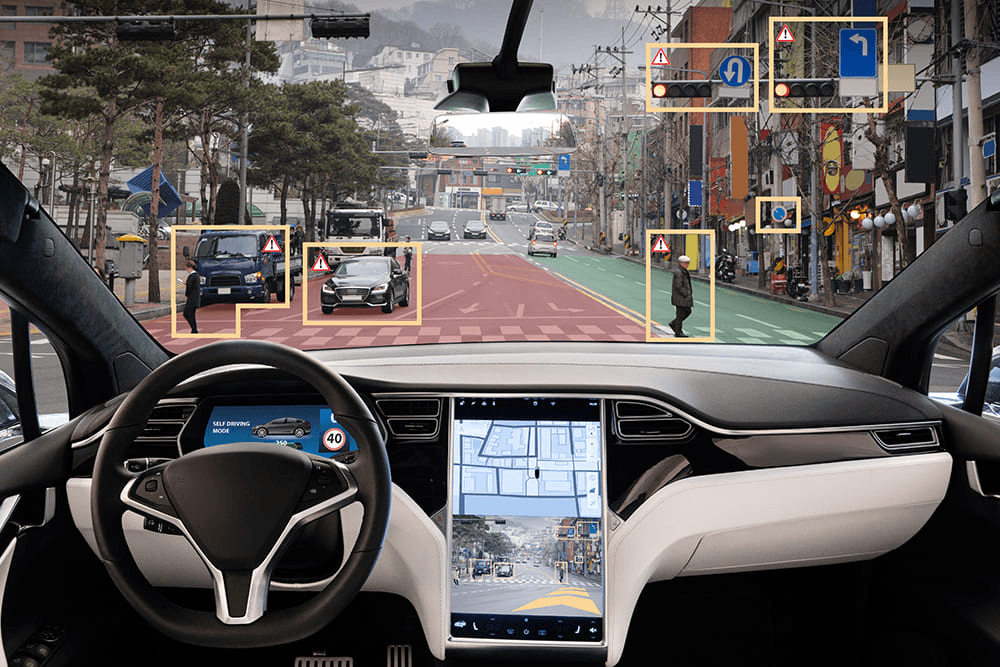

Tesla’s Self-driving Cars

Tesla isn’t just a car manufacturer; it’s a technology company that uses artificial intelligence in its pursuit of fully autonomous cars. Using “imitation learning”, its algorithms learn from real drivers’ decisions and reactions. Tesla has an edge over competitors by crowdsourcing data from all Tesla vehicles on the road.

Their unique neural network, HydraNet, combines individual images into a 3D space and detects pedestrians, vehicles, and other obstacles on the road in real time for accurate decision-making.

Challenges In Machine Learning Statistics For Real-Time Data

As we explore the world of real-time machine learning statistics, you should recognize the challenges that come with it. Let’s see some key obstacles that need to be addressed to ensure the successful implementation and ongoing performance of real-time ML systems.

- Dealing with uncertainty: Real-time data comes in at varying speeds and volumes which can make it difficult to anticipate and manage. Communicate expectations and requirements for real-time data processing clearly.

- Handling errors: Undetected errors can significantly impact real-time ML systems. Identifying the source of errors and addressing them promptly is crucial to maintain system performance and accuracy.

- Ensuring data quality: Poor data quality can cause unreliable predictions and costly mistakes. Real-time ML systems must carefully monitor data streams to detect and address issues like missing data points, incomplete records, and biases.

- Integrating multiple data sources: Real-time data often comes from various sources and formats. Integrating this data seamlessly into ML systems can be a complex task, as seen in applications like Google Maps, which uses data from GPS devices, sensors, weather stations, and more.

- Preventing data leakage: Data leakage occurs when a model is trained on information that would not be available at prediction time, such as feature values recorded after the event being predicted. Preventing this in real-time data streams is challenging but necessary to maintain model accuracy and performance.

- Managing data latency: Delays in data production or transmission can lead to outdated or stale data which can negatively affect model accuracy. Real-time ML systems need to account for potential delays and ensure they’re working with the most up-to-date information.

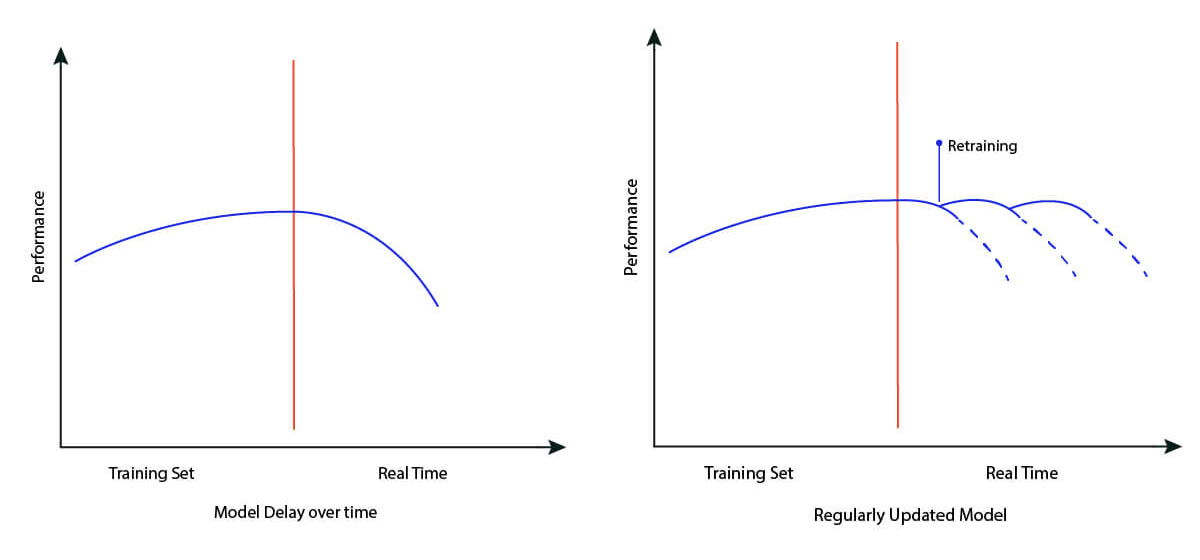

- Implementing stateful retraining: Stateful retraining allows models to retain past learnings while incorporating new data. Setting up the infrastructure for stateful retraining can be a significant challenge but is necessary to handle concept drift effectively.

- Continual model evaluation: As models learn from new data, evaluate their performance regularly. This requires complex A/B testing systems that assess models on both fresh and old data to ensure that they remain accurate and effective in changing environments.
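One common safeguard against data leakage is a point-in-time (“as of”) lookup. When building a training example for an event, it uses only feature values recorded at or before that event’s timestamp. The sketch below keeps a time-ordered history per feature and uses binary search for the lookup. The class name and the balance values are hypothetical, and the sketch assumes updates arrive in timestamp order.

```python
import bisect
from typing import List, Optional

class FeatureHistory:
    """Time-ordered values of one feature for one entity, with "as of" lookups."""

    def __init__(self) -> None:
        self._timestamps: List[float] = []
        self._values: List[float] = []

    def append(self, ts: float, value: float) -> None:
        # Assumes in-order updates; out-of-order data would need extra handling
        if self._timestamps and ts < self._timestamps[-1]:
            raise ValueError("updates must arrive in timestamp order")
        self._timestamps.append(ts)
        self._values.append(value)

    def as_of(self, ts: float) -> Optional[float]:
        """Latest value recorded at or before ts, or None if nothing was known yet."""
        i = bisect.bisect_right(self._timestamps, ts)
        return self._values[i - 1] if i > 0 else None

balance = FeatureHistory()
balance.append(100.0, 500.0)
balance.append(200.0, 20.0)
balance.append(300.0, 900.0)

# A training example for an event at t=250 must not see the value written at t=300
value_at_event = balance.as_of(250.0)  # 20.0
```

Using `bisect_right` means a value written at exactly the event’s timestamp is included. Whether that is appropriate depends on whether the value was actually available when the prediction would have been made.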

Conclusion

Machine learning statistics is crucial for uncovering hidden patterns and building real-time, data-driven solutions. Implementing these techniques can enhance user experiences, detect fraud, and optimize various processes. Despite the many challenges faced by real-time ML, harnessing the power of machine learning in real-time scenarios is achievable with the right tools.

Estuary Flow is a powerful platform, built on a stateful stream processing foundation, that can help you set up scalable real-time data pipelines in no time. It comes with out-of-the-box integrations for many popular data sources and destinations, including SaaS apps, databases, and data warehouses. So make the most of machine learning statistics in real-time data processing by using Flow for your real-time data ingestion needs.

Sign up for Estuary Flow today and explore its capabilities to stay ahead of the competition and enhance your business processes. Your first pipeline is free.