What’s a Data Product, Really? Data Usability in Action

Deepen your understanding of what data products actually are by walking through the usability traits of a data product platform. Plus, a straightforward example.

You may or may not already be familiar with “data products.”

The term “data product” is closely associated with “data mesh,” but please don’t let that scare you off. Depending on who you ask, “data mesh” is either just another buzzword, a game-changing approach for dealing with data at scale, or just about anything in between.

Many organizations aren’t large enough to have multiple data teams, so the problem that data mesh addresses (organizational scaling) just isn’t relevant to them. So I’d like to set all that aside for now and focus on the “data product” part, because I think it’s a far more straightforward framing that’s useful for organizations of any size.

Below, I'll quickly define the term "data product" and explain why it's useful. But more importantly, I'll tie this concept to reality. I'll walk through a data platform centered on data products and data usability, and end with a straightforward example data product.

What is a Data Product?

According to Zhamak Dehghani’s Data Mesh book, a Data Product is an asset that adheres to a set of usability characteristics:

- Discoverable

- Addressable

- Understandable

- Trustworthy and truthful

- Natively accessible

- Interoperable and composable

- Valuable on its own

- Secure

What I like most about this is the framing of usability characteristics. It focuses on how to get real value out of your data by making your data usable.

In other words, in order to get the most value out of your data, you need to make it easy for people (and software) to use correctly.

When you put it like that, it sounds almost too obvious. But in reality, it's still way too hard for people to integrate new data. Zhamak’s characteristics of data products are a pretty good breakdown of precisely what it means for data to really be usable. And many of those characteristics are things that your data platform needs to provide.

Most importantly, the benefits of data as a product apply to nearly any size organization, from enterprises to 12-person startups.

Zhamak’s list makes the advantages of a data product obvious from a semi-technical perspective. But we can easily extrapolate into high-level business outcomes, such as…

- Improved data governance.

- Faster time-to-insight.

- Streamlined data workflows.

- Reliable answers to business questions.

…just to name a few.

Our company, Estuary, had even fewer than 12 members when we started thinking about data products. As we built our DataOps platform, Flow, we used the characteristics of data usability as a north star.

The advantages of data products were too clear to ignore, and we hadn’t seen many off-the-shelf products that empowered folks to create them.

Before we move on, a bit of context.

At first blush, Flow looks a lot like a standard ETL tool: it helps you move data from point A to point B with seamless UX. On closer examination, you’ll notice that Flow’s conceptual model is different. Put simply, Flow treats the data in motion as a first-class citizen.

Each dataset Flow extracts from a source system, or creates as the result of a transformation, is called a collection.

To better illustrate data products and data usability, I’d like to tell you about how we designed collections to embody characteristics on Zhamak’s list.

Creating Usability With Data Product Metadata and Management

Collections in Flow are, well, data products. Somewhat more narrowly, Flow collections are real-time data lakes that are backed by cloud storage. But they’re not just data lakes; they’re complete data products. As such, they have attached metadata, such as:

- A globally unique name, which makes them addressable.

- A schema that defines strictly enforced rules about what documents can be written to it.

- An (optionally separate) schema that defines strictly enforced guarantees to consumers about what documents can be read from it.

- A key that can be extracted from each document and uniquely identifies a domain entity (for example, if documents represent users, then the key would be the user ID). This helps make collections interoperable and composable.

- Precisely defined rules for how to reduce multiple documents having the same key. This means there's consistent and automatic handling of events representing domain entity updates.

- Comprehensive build-time validations to ensure that changes to collection schemas don’t break consumers.
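To make the list above a little more concrete, here is a minimal Python sketch of a keyed, schema-checked collection. It is an illustration of the ideas only, not Flow's implementation or API: the `Collection` class, the `required_fields` toy schema, the `acme/users` name, and the reduction rule are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Document = dict[str, Any]


@dataclass
class Collection:
    """Hypothetical in-memory stand-in for a keyed, schema-checked collection."""

    name: str                         # globally unique, which makes it addressable
    key: str                          # field that identifies the domain entity
    required_fields: dict[str, type]  # toy write schema: field name -> expected type
    reduce: Callable[[Document, Document], Document]  # combines same-key documents
    _state: dict[Any, Document] = field(default_factory=dict)

    def write(self, doc: Document) -> None:
        # Enforce the write schema before accepting the document.
        for name, expected in self.required_fields.items():
            if not isinstance(doc.get(name), expected):
                raise ValueError(
                    f"{self.name}: field {name!r} must be {expected.__name__}"
                )
        k = doc[self.key]
        # Reduce into any existing document that shares the same key.
        self._state[k] = self.reduce(self._state[k], doc) if k in self._state else doc

    def read(self) -> list[Document]:
        return list(self._state.values())


def merge_and_sum_logins(old: Document, new: Document) -> Document:
    # Newer fields replace older ones, except `logins`, which is summed.
    return {**old, **new, "logins": old["logins"] + new["logins"]}


users = Collection(
    name="acme/users",
    key="user_id",
    required_fields={"user_id": str, "email": str, "logins": int},
    reduce=merge_and_sum_logins,
)
users.write({"user_id": "u1", "email": "a@example.com", "logins": 1})
users.write({"user_id": "u1", "email": "a@new.example.com", "logins": 2})
print(users.read())
```

Both writes share the key `u1`, so a reader sees one document with the latest email and the summed login count. A document that violates the toy schema is rejected at write time rather than surprising a consumer later.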

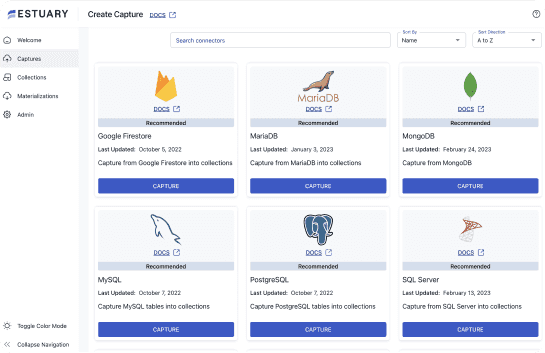

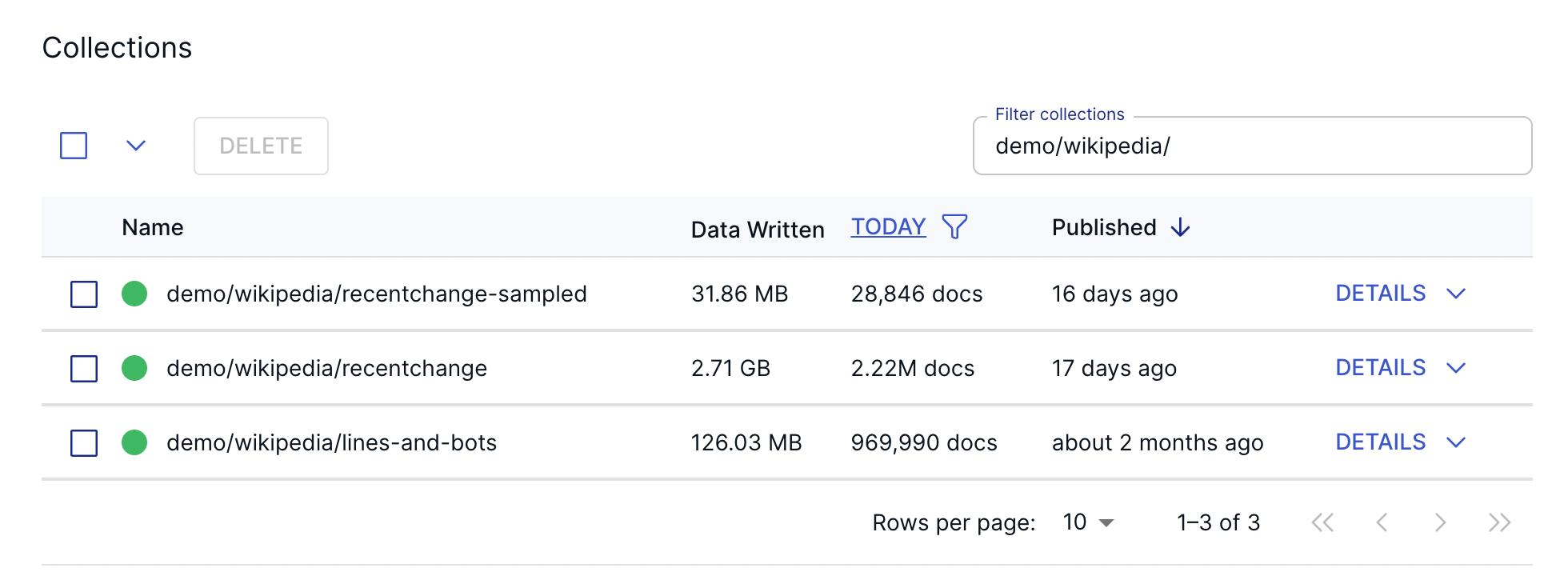

Collections are the main entity that’s managed in the Flow platform. Most collections are created by capturing data from some external system, but you can also create collections by transforming data from other collections. The Flow dashboard has a Collections page, where you can directly search all the collections you have access to.

There’s also an authorization system that lets you define both fine-grained and coarse-grained access policies for humans and software. Between the dashboard and authorization capabilities, data product management becomes much easier.

So we’ve taken care of discoverable, addressable, and a good chunk of trustworthy and truthful right there.

Making a Data Product Natively Accessible

But in order to be a data product, a collection also has to be natively accessible. This can mean different things to different people, but we can sum it up by saying:

You shouldn’t have to make any major changes to your existing work in order to consume this data product.

Close your eyes and imagine how you’d want to go about incorporating a new dataset into whatever data project you’re working on right now. Not many of you imagined using a new and complex API from some startup, huh? Flow has APIs for reading data from collections, but those are generally only used by our own application. The main way that people consume their Flow collections is through materialization into whichever database, storage bucket, spreadsheet, or other system they’re already using.

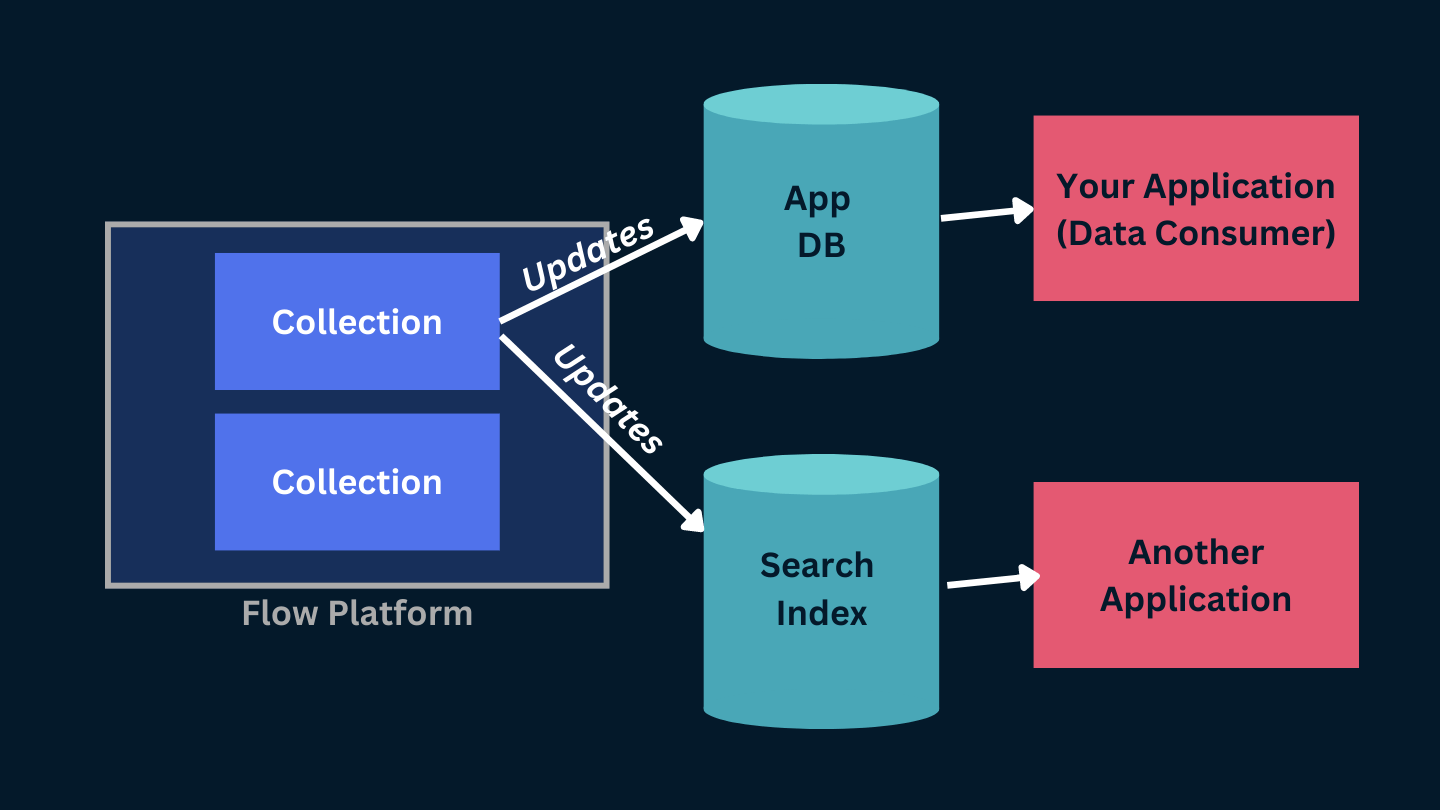

Materialization is the process of taking the collection data and pushing it out to some other system. Flow has a growing set of materialization connectors that can deliver data products to wherever they’re needed. This includes databases, data warehouses, data lakes, search indices, pub-sub, webhooks, and even Google Sheets.

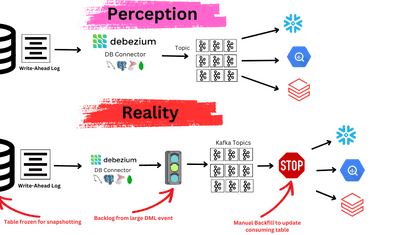

It’s not enough to deliver a data product once, though. Data changes, and we need to account for this fact if we want to make a data product that’s natively accessible.

Updates are the hardest part of all of this because you can’t require that your data consumers have downtime whenever a dataset is updated. For example, you couldn’t drop and re-create tables in the destination whenever the source data changes, because doing so would introduce downtime for whatever is reading from those tables.

Flow materializations deliver continuous updates to the destination. For example, if you materialize a collection to a Postgres table, rows will be inserted or updated as data is written to the source collection.
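The insert-or-update behavior described above can be sketched with a keyed upsert. This is an illustration under stated assumptions, not the way Flow's connectors are implemented: it uses Python's built-in `sqlite3` module as a stand-in for Postgres (both support `INSERT ... ON CONFLICT ... DO UPDATE`), and the `users` table, its columns, and the `materialize` helper are hypothetical.

```python
import sqlite3


def materialize(conn: sqlite3.Connection, docs: list[dict]) -> None:
    """Apply each document as an insert-or-update keyed on user_id."""
    conn.executemany(
        """
        INSERT INTO users (user_id, email) VALUES (:user_id, :email)
        ON CONFLICT(user_id) DO UPDATE SET email = excluded.email
        """,
        docs,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (user_id TEXT PRIMARY KEY, email TEXT NOT NULL)")

# First batch: u1 does not exist yet, so the row is inserted.
materialize(conn, [{"user_id": "u1", "email": "a@example.com"}])

# Second batch: u1 is updated in place and u2 is inserted.
materialize(
    conn,
    [
        {"user_id": "u1", "email": "a@new.example.com"},
        {"user_id": "u2", "email": "b@example.com"},
    ],
)

rows = conn.execute("SELECT user_id, email FROM users ORDER BY user_id").fetchall()
print(rows)
```

The table is never dropped or re-created, so anything reading from it keeps working while new data arrives.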

The corollary to this is that a data product is still the same data product, regardless of how or where you consume it. When we consume data, we depend on some very specific representation of it, like Parquet files in a cloud storage bucket, or tables in a Postgres server. This representation matters to the people and software that are consuming the data, and use cases impose constraints on the acceptable representation.

But let's not confuse the product and the packaging! The product is the data. The representation is just the packaging. A beer is a beer, whether you buy it in a can, a pint glass, or a keg. Consumers of beer have known this for ages, but this state of affairs has eluded consumers of data for too long.

A Real-World Data Product Example

Let’s consider a dataset from Wikipedia. Wikipedia offers lots of data for free, which you can access using their APIs. These APIs are wonderful, but of course, there can be a big difference between an API and a data product.

So we’ve created several example data products from this API by capturing the real-time stream of events corresponding to edits of Wikipedia pages.

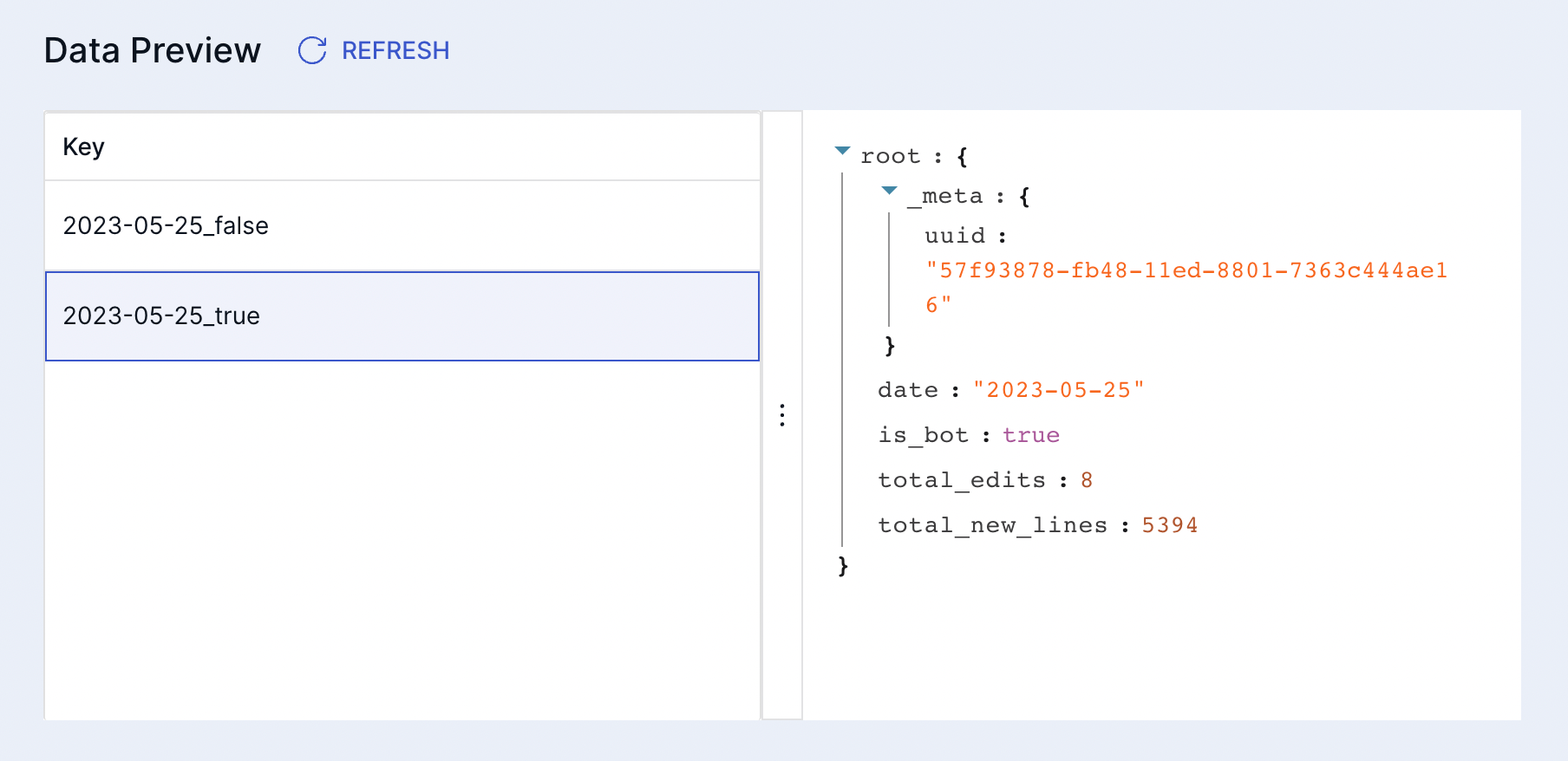

For example, the lines-and-bots collection is a data product that aggregates all the Wikipedia edits by day and whether the edit was made by a human user or a bot. It sums the total number of edited lines for each, so you can get an idea of whether humans or bots are making more edits on a given day.
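As a rough sketch of the aggregation just described, the following Python groups edit events by day and by whether a bot made the edit, then sums the edited lines. The field names (`date`, `bot`, `lines_changed`) and the sample events are made up for illustration; they are not the actual Wikipedia event schema or the real definition of the lines-and-bots collection.

```python
from collections import defaultdict


def aggregate_lines(events: list[dict]) -> dict[tuple[str, bool], int]:
    """Sum edited lines per (day, is_bot) pair."""
    totals: dict[tuple[str, bool], int] = defaultdict(int)
    for event in events:
        totals[(event["date"], event["bot"])] += event["lines_changed"]
    return dict(totals)


# Illustrative events only, not real Wikipedia data.
events = [
    {"date": "2022-10-01", "bot": False, "lines_changed": 12},
    {"date": "2022-10-01", "bot": True, "lines_changed": 40},
    {"date": "2022-10-01", "bot": False, "lines_changed": 3},
    {"date": "2022-10-02", "bot": True, "lines_changed": 7},
]

totals = aggregate_lines(events)
for (day, is_bot), lines in sorted(totals.items()):
    print(day, "bot" if is_bot else "human", lines)
```

In a streaming setting, the same sum would be maintained incrementally as each event arrives instead of being recomputed over a full list.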

We provide every user read-only access to these data products (so don’t get thrown off by that login screen; connect with your GitHub or Gmail account to have a look!).

The data products can then be leveraged in the external system of your choice. For example, we’ve created this materialization into a Google Sheet. You can check out the actual spreadsheet here, and if you watch closely you’ll see the numbers there update every few seconds.

Conclusion

Thinking about data as a product is a useful framing to help organizations of any size get the most value out of their data. There’s a lot more that goes into a data product than just the data itself. While some of the characteristics of a great data product depend on the humans who created it, a good data platform ought to get you most of the way there.

Regardless of whether you use Flow or another data platform, I hope that you’ll find these ideas useful. If you want to give Flow a try, we have a free tier with no credit card required. You can connect with our team on Slack, GitHub, and LinkedIn.