Data integration has emerged as the linchpin of success for organizations across industries. In an era where decisions are driven by insights derived from data, having a comprehensive data integration strategy is a mission-critical necessity that separates industry leaders from laggards.

However, formulating and implementing a seamless data integration strategy can often be a complex undertaking. Enterprises grapple with many challenges, from dealing with huge amounts of data from diverse sources and maintaining data security to abiding by regulatory requirements.

Today’s guide will equip you with effective solutions and an understanding of data integration. We will examine the basics of data integration and its importance and discuss 11 strategies you can use for successful data integration.

By the time you’re done reading this 10-minute guide, you will know how to formulate and implement a robust data integration strategy for your organization.

What Is Data Integration?

Data integration is a fundamental process that consolidates data from diverse sources to deliver a unified view and generate business value from the data. It involves combining technical and business processes to convert the integrated data into meaningful and valuable information.

5 Reasons Why You Need Data Integration

Here are the key reasons why data integration is needed for your business:

- Cost reduction: Data integration can save costs by eliminating manual data handling and reducing errors. It provides an efficient, less error-prone mechanism for data governance and management.

- Real-time insights: Data integration provides a continuous update of data for real-time insights. This capability helps you respond promptly to changes and ensures agility in business operations.

- Better decision-making: Data integration provides a comprehensive view of business information for data-driven decision-making. It ensures that all decisions are based on accurate, up-to-date, and complete data.

- Increased efficiency and productivity: The automation of data gathering and consolidation saves time and reduces the risk of errors. This increase in operational efficiency directly translates into enhanced productivity.

- Enhanced collaboration: By allowing data sharing across various departments within an organization, data integration fosters improved collaboration. It ensures that all teams have access to the same, consistent data, promoting a more collaborative work environment.

Now that we’ve covered the basics of data integration, let’s see how you can use different strategies for optimal results.

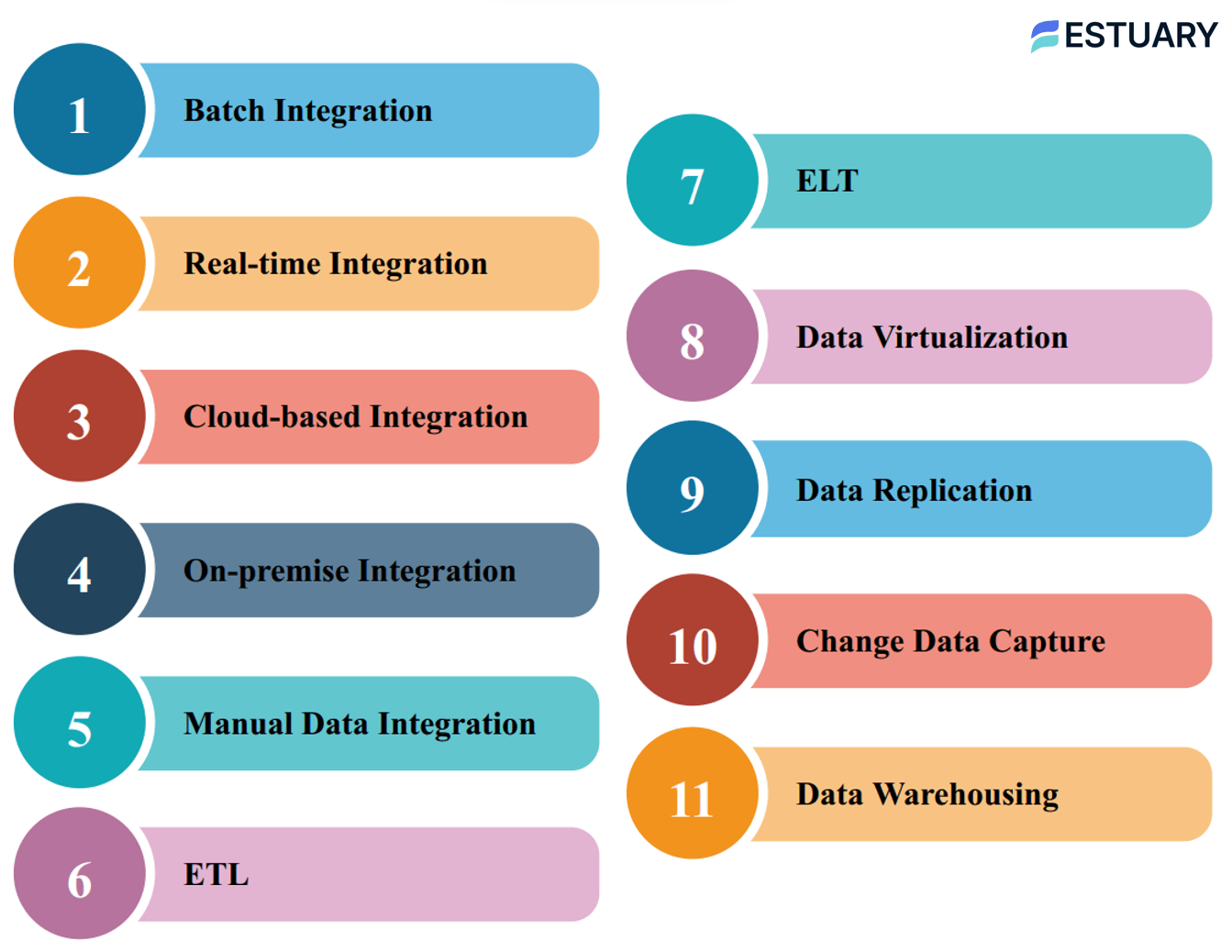

11 Core Data Integration Strategies & Techniques For Improved Business Intelligence

Here are 11 core data integration strategies and techniques that you can implement.

Batch Integration

Batch integration is a strategy in which large volumes of data are collected over a specific period and processed all at once. Instead of processing data in real time as it arrives, batch integration waits for a batch of data to accumulate before processing it together. This approach is ideal when the operations you perform don't require immediate data updates.

In batch integration, data from various sources is collected and stored in a temporary storage or staging area, often referred to as a batch queue. The data is accumulated in this queue until a predetermined batch size or a specific time interval is reached. Once the batch criteria are met, the entire batch is processed together.

Batch integration allows for efficient processing and reduces the complexity and cost compared to real-time integration methods. Since the data processing is done in bulk, it can be optimized for performance and resource utilization. In legacy workflows, this usually meant working around the demands of small, on-premise servers, but strategically batching data integration is still relevant today. For example, batching write operations to a data warehouse can help you reduce active warehouse time.

However, batch integration is not suitable for scenarios where immediate data updates are required.
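The batch queue described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: `BatchQueue`, `sink`, `max_size`, and `max_age_seconds` are hypothetical names chosen for this example, and the time limit is only checked when a new record arrives.

```python
import time
from typing import Any, Callable, List, Optional


class BatchQueue:
    """Accumulates records and hands them to `sink` as one batch.

    A flush happens when `max_size` records have accumulated, or when
    `max_age_seconds` have passed since the first record of the current
    batch arrived (checked on each call to `add`).
    """

    def __init__(
        self,
        sink: Callable[[List[Any]], None],
        max_size: int = 3,
        max_age_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._sink = sink
        self._max_size = max_size
        self._max_age = max_age_seconds
        self._clock = clock
        self._buffer: List[Any] = []
        self._first_arrival: Optional[float] = None

    def add(self, record: Any) -> None:
        if not self._buffer:
            # Start the age timer with the first record of a new batch.
            self._first_arrival = self._clock()
        self._buffer.append(record)
        if self._should_flush():
            self.flush()

    def _should_flush(self) -> bool:
        if len(self._buffer) >= self._max_size:
            return True
        return self._clock() - self._first_arrival >= self._max_age

    def flush(self) -> None:
        """Process whatever has accumulated, even a partial batch."""
        if not self._buffer:
            return
        batch, self._buffer = self._buffer, []
        self._first_arrival = None
        self._sink(batch)
```

In a real pipeline, `sink` would be the bulk write to the target system, and a scheduler would call `flush()` periodically so that a quiet source does not leave a partial batch waiting indefinitely.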

Real-Time Integration

Real-time integration is a method of transferring data between systems with minimal delay. As soon as data is created or updated in the source system, it is transferred to the target system. The main advantage of real-time integration is that you always have the most current data at your fingertips.

Unlike batch integration, which transfers data in batches at scheduled intervals, real-time integration has a constant flow of data between systems, and any changes or updates are quickly reflected in the target system. This is particularly useful in situations where you need to integrate data with timeliness and accuracy, like financial transactions, inventory management, or monitoring systems.

Although real-time integration offers immediate data transfer, it can be more complex and costly to implement than batch integration. It requires specialized infrastructure and technologies to ensure efficient and reliable data transfer. Real-time integration can be engineered to be just as efficient as batch integration (as in Estuary Flow), but its continuous nature can require more resources and bandwidth in upstream and downstream systems to handle the constant flow of data.
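To make the contrast with batching concrete, here is a minimal sketch of the push model, where each write to the source is forwarded to the target as it happens. `SourceSystem` and `mirror_into` are hypothetical names for this illustration; real systems would sit on top of a message broker or streaming platform rather than in-process callbacks.

```python
from typing import Any, Callable, Dict, List

ChangeHandler = Callable[[str, Any], None]


class SourceSystem:
    """Hypothetical source that pushes every write to its subscribers."""

    def __init__(self) -> None:
        self._rows: Dict[str, Any] = {}
        self._subscribers: List[ChangeHandler] = []

    def subscribe(self, handler: ChangeHandler) -> None:
        self._subscribers.append(handler)

    def upsert(self, key: str, value: Any) -> None:
        self._rows[key] = value
        # Forward the change right away instead of waiting for a batch.
        for handler in self._subscribers:
            handler(key, value)


def mirror_into(target: Dict[str, Any]) -> ChangeHandler:
    """Build a handler that applies each change to `target`."""

    def handler(key: str, value: Any) -> None:
        target[key] = value

    return handler
```

After `source.subscribe(mirror_into(target))`, every call to `source.upsert(...)` is reflected in `target` before the call returns, which is the property the inventory and financial examples above depend on.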

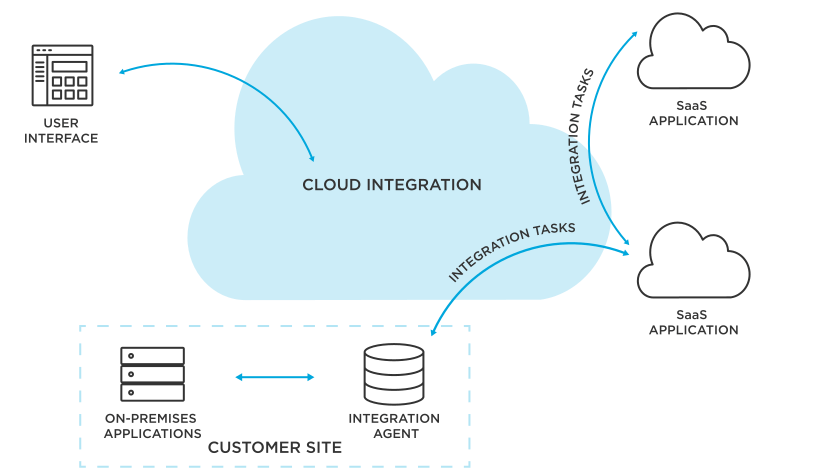

Cloud-Based Integration

Cloud-based integration uses cloud services for data storage, processing, and integration. One of its key benefits is scalability. With cloud services, you can easily scale your data storage and processing resources up or down based on your needs. This scalability eliminates the need to invest in additional hardware or infrastructure, saving you both time and money.

Cloud services also allow you to access your data anytime, anywhere, and from any device as long as you have an internet connection. They can seamlessly integrate with other cloud-based applications and services for a connected and cohesive workflow.

By using cloud services, you can avoid the upfront costs associated with purchasing and maintaining physical servers or infrastructure. Cloud services are typically offered on a pay-as-you-go or subscription basis, which can make them a good fit for small and medium-sized businesses.

On-Premise Integration

On-premise integration means your data is stored and processed on local servers within your organization. This strategy gives you more control over your data since it's not stored on third-party servers. You can manage and maintain your servers and infrastructure according to your specific requirements and perform customized configurations and fine-tuning.

Data security is another benefit of the on-premise integration technique. Since the data is stored within the organization's infrastructure, you can implement and enforce your own security measures and protocols. This includes physical security measures, like restricted access to server rooms, as well as cybersecurity measures, like firewalls, intrusion detection systems, and encryption.

However, on-premise integration can be more expensive and less flexible than cloud-based integration. If direct control over your data and its security matters more to you than those trade-offs, on-premise integration is the way to go.

Manual Data Integration

Manual data integration is as straightforward as it sounds. In this method, you write code to gather, tweak, and merge data from different sources. Since you have complete control over the code, you can customize the integration process according to your specific requirements. This way, you can handle data from different sources and perform any necessary transformations or modifications to ensure compatibility and consistency.

It's a good fit when you're dealing with a small number of data sources and is suitable for situations where more automated integration methods are not feasible or necessary. But remember, this integration technique can be time-consuming, and scaling it up might pose a challenge.
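As a rough idea of what hand-written integration code looks like, the sketch below merges a CSV export and a JSON export into one record per customer. The two sources, their field names, and the `integrate` function are all made up for this example.

```python
import csv
import io
import json
from typing import Any, Dict, List

# Hypothetical export from a CRM system.
CRM_CSV = """customer_id,full_name,email
1,Ada Lovelace,ADA@example.com
2,Alan Turing,alan@example.com
"""

# Hypothetical export from a billing system.
BILLING_JSON = """[
  {"customer": 1, "total_cents": 12500},
  {"customer": 2, "total_cents": 990},
  {"customer": 1, "total_cents": 500}
]"""


def integrate(crm_csv: str, billing_json: str) -> List[Dict[str, Any]]:
    """Gather both sources, tweak the fields, and merge them by customer."""
    customers: Dict[int, Dict[str, Any]] = {}
    for row in csv.DictReader(io.StringIO(crm_csv)):
        customer_id = int(row["customer_id"])
        customers[customer_id] = {
            "customer_id": customer_id,
            "name": row["full_name"].strip(),
            "email": row["email"].strip().lower(),  # normalize for consistency
            "total_cents": 0,
        }
    for invoice in json.loads(billing_json):
        customer = customers.get(invoice["customer"])
        if customer is None:
            continue  # invoice for a customer the CRM doesn't know about
        customer["total_cents"] += invoice["total_cents"]
    return list(customers.values())
```

Every new source means another loader and another set of field mappings written by hand, which is where the scaling challenge mentioned above comes from.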

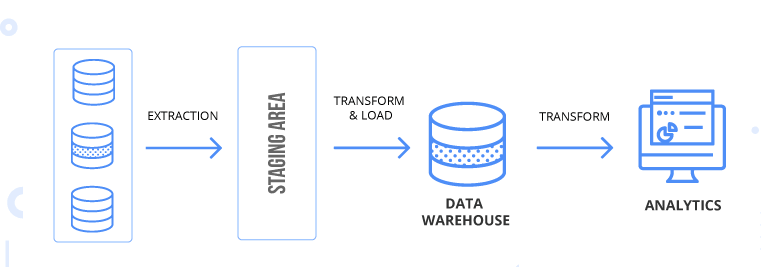

Extract, Transform, Load (ETL)

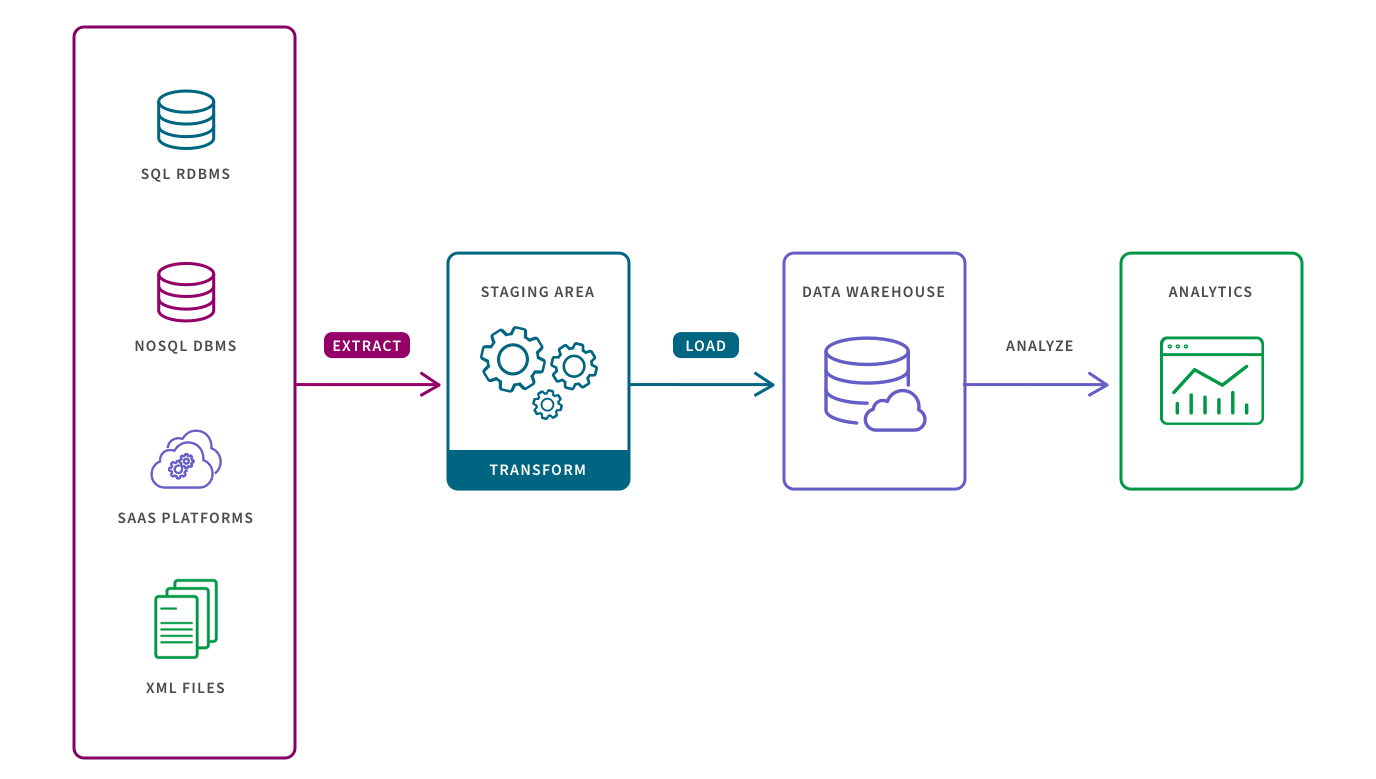

Extract, Transform, Load is a 3-step process where you first pull data from various sources, then transform it into a format that fits your needs, and finally load it into a unified database. By extracting and combining data from multiple sources, you can create a centralized and unified view of your data.

The ETL strategy helps improve data quality. During the transformation phase, data can be cleansed, standardized, and validated to ensure accuracy and consistency. This step identifies and resolves inconsistent or duplicate data, which enhances the overall quality of the integrated dataset.

ETL is a tried-and-tested enterprise data integration strategy that has been widely used for many years. It provides a structured and systematic approach to handling large volumes of data from diverse sources. However, make sure you’re using an efficient and scalable transformation framework: ETL processes can be resource-intensive and time-consuming, especially when dealing with complex data structures or frequent data updates.
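The three steps can be sketched with Python's built-in `sqlite3` module standing in for the unified database. The sample rows, the `customers` table, and the validation rule (an email must contain `@`) are assumptions made for illustration only.

```python
import sqlite3
from typing import Dict, List, Tuple

# Hypothetical raw records pulled from different sources.
RAW_ROWS = [
    {"email": " Ada@Example.com ", "country": "uk"},
    {"email": "ada@example.com", "country": "UK"},  # duplicate once cleaned
    {"email": "not-an-email", "country": "US"},     # fails validation
    {"email": "alan@example.com", "country": "us"},
]


def extract() -> List[Dict[str, str]]:
    """Extract: pull raw records (here, from an in-memory list)."""
    return list(RAW_ROWS)


def transform(rows: List[Dict[str, str]]) -> List[Tuple[str, str]]:
    """Transform: cleanse, standardize, validate, and de-duplicate."""
    seen = set()
    clean = []
    for row in rows:
        email = row["email"].strip().lower()
        country = row["country"].strip().upper()
        if "@" not in email or email in seen:
            continue
        seen.add(email)
        clean.append((email, country))
    return clean


def load(conn: sqlite3.Connection, rows: List[Tuple[str, str]]) -> None:
    """Load: write the already-clean rows into the target database."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(email TEXT PRIMARY KEY, country TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?)", rows)
    conn.commit()


def run_etl(conn: sqlite3.Connection) -> None:
    load(conn, transform(extract()))
```

Note that the transformation runs in the pipeline's own process before anything reaches the database, so the pipeline needs enough resources of its own to handle the data volume.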

Extract, Load, Transform (ELT)

In the Extract, Load, Transform (ELT) approach, you extract data from different sources, load it directly into your data warehouse, and then transform it as required. ELT is a great choice when you're dealing with large volumes of data. By loading the data into the warehouse before transforming it, you can leverage the processing power and storage capacity of the warehouse infrastructure.

ELT also provides greater flexibility in terms of data transformation. Since the data is already in the warehouse, you can use the powerful querying and processing capabilities of the warehouse to perform complex transformations directly on the data. This eliminates the need for a separate transformation step before loading the data into the warehouse.

Still, it’s important to proceed with caution when considering a pure ELT data integration pattern: performing too many routine transformations in the data warehouse can prove costly.
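For comparison with ETL, here is a minimal ELT sketch in which raw rows are loaded untouched and the transformation is a SQL statement executed by the warehouse itself. SQLite stands in for the warehouse, and the `raw_orders` and `customer_totals` tables are hypothetical.

```python
import sqlite3
from typing import List, Tuple


def load_raw(conn: sqlite3.Connection, rows: List[Tuple[int, str, int]]) -> None:
    """Load: store the extracted rows exactly as they arrived."""
    conn.execute(
        "CREATE TABLE raw_orders "
        "(order_id INTEGER, customer TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)
    conn.commit()


def transform_in_warehouse(conn: sqlite3.Connection) -> None:
    """Transform: let the warehouse engine clean and aggregate the data."""
    conn.execute(
        """
        CREATE TABLE customer_totals AS
        SELECT lower(trim(customer)) AS customer,
               SUM(amount_cents)     AS total_cents,
               COUNT(*)              AS order_count
        FROM raw_orders
        GROUP BY lower(trim(customer))
        """
    )
    conn.commit()
```

Because the raw table is kept, you can add or change transformations later without re-extracting. The cost concern above applies here: each run of `transform_in_warehouse` consumes warehouse compute.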

Data Virtualization

Data virtualization allows you to access and work with data from different sources without actually moving or duplicating the data. It creates a virtual or logical layer that brings together data from various systems and presents it to users in real time.

In simpler terms, data virtualization acts as a bridge between different data sources, making it easier for users to access and analyze information without the hassle of physically moving or copying the data. This provides a unified view of data and helps you streamline your data management processes.
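A minimal sketch of the idea: the class below holds no data of its own and resolves each request against the underlying sources at query time. `VirtualCustomerView` and the two lookup functions are hypothetical stand-ins for a CRM system and a billing system.

```python
from typing import Any, Callable, Dict, List, Optional


class VirtualCustomerView:
    """Logical layer over two sources; nothing is copied or stored here."""

    def __init__(
        self,
        crm_lookup: Callable[[int], Optional[Dict[str, Any]]],
        billing_lookup: Callable[[int], List[Dict[str, Any]]],
    ) -> None:
        self._crm_lookup = crm_lookup
        self._billing_lookup = billing_lookup

    def get(self, customer_id: int) -> Optional[Dict[str, Any]]:
        # Both sources are queried at request time, so the result
        # always reflects their current state.
        profile = self._crm_lookup(customer_id)
        if profile is None:
            return None
        invoices = self._billing_lookup(customer_id)
        return {
            "customer_id": customer_id,
            "name": profile["name"],
            "open_invoice_count": len(invoices),
            "open_total_cents": sum(i["amount_cents"] for i in invoices),
        }
```

The trade-off is that every call to `get` puts load on the source systems, since there is no local copy to read from.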

Data Replication

Data replication is a straightforward technique used to copy data from one place to another. It's typically used to transfer data between two databases or systems. The main feature of data replication is that it doesn't modify the data itself; it merely duplicates it. This means that the replicated data remains identical to the original, ensuring consistency between the source and destination.

Since data replication operates by copying the data rather than manipulating it, the replication process can be performed quickly and seamlessly. This makes it suitable for scenarios where timely and synchronized data updates are crucial. It also ensures improved data availability as you can access the replicated data in the destination system without relying on the original source.
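As a small illustration, Python's `sqlite3` module exposes SQLite's backup API through `Connection.backup`, which copies a whole database without transforming it. The `replicate` wrapper is a hypothetical helper for this example; replication between other database systems relies on their own tooling.

```python
import sqlite3


def replicate(source: sqlite3.Connection, target: sqlite3.Connection) -> None:
    """Copy the full contents of `source` into `target`, unmodified.

    Any existing contents of `target` are overwritten.
    """
    source.commit()  # make sure pending writes are included in the copy
    source.backup(target)
```

Once the copy completes, queries can run against `target` without touching the original source.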

Change Data Capture (CDC)

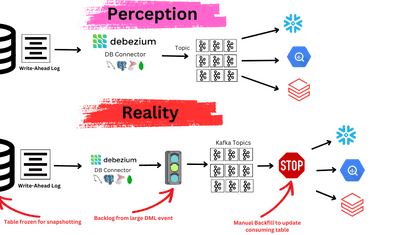

Change Data Capture (CDC) is a technique that identifies and captures changes made in a database and applies them to another data repository. Its primary purpose is to enable real-time data integration by efficiently identifying and transferring only the modified data.

CDC plays an important role in reducing the resources and efforts required to load data into a data warehouse or another target system. Instead of replicating the entire database every time, CDC focuses on capturing and transferring the specific changes that occur. By doing so, it minimizes the amount of data that needs to be processed and transferred for more efficient and timely updates to the target system.

CDC can help in scenarios where data needs to be continuously synchronized across systems, like in data warehousing or maintaining a data lake. With CDC, you can keep your data repositories up-to-date with minimal latency and ensure that users have access to the most recent and accurate information.
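One simple way to see CDC in action is a trigger-based change log, sketched below with SQLite. The `products` and `change_log` tables and the `apply_changes` function are assumptions for this example. Production CDC tools more commonly read the database's own transaction log instead of using triggers, and this sketch also assumes primary keys are never updated.

```python
import sqlite3


def create_source() -> sqlite3.Connection:
    """Source database whose triggers record every change to `products`."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE products (
            id INTEGER PRIMARY KEY, name TEXT, price_cents INTEGER
        );
        CREATE TABLE change_log (
            seq INTEGER PRIMARY KEY AUTOINCREMENT,
            op TEXT, id INTEGER, name TEXT, price_cents INTEGER
        );
        CREATE TRIGGER products_ins AFTER INSERT ON products BEGIN
            INSERT INTO change_log (op, id, name, price_cents)
            VALUES ('upsert', NEW.id, NEW.name, NEW.price_cents);
        END;
        CREATE TRIGGER products_upd AFTER UPDATE ON products BEGIN
            INSERT INTO change_log (op, id, name, price_cents)
            VALUES ('upsert', NEW.id, NEW.name, NEW.price_cents);
        END;
        CREATE TRIGGER products_del AFTER DELETE ON products BEGIN
            INSERT INTO change_log (op, id) VALUES ('delete', OLD.id);
        END;
        """
    )
    return conn


def apply_changes(
    source: sqlite3.Connection, target: sqlite3.Connection, last_seq: int
) -> int:
    """Apply only the changes recorded after `last_seq`; return the new position."""
    changes = source.execute(
        "SELECT seq, op, id, name, price_cents FROM change_log "
        "WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    for seq, op, row_id, name, price_cents in changes:
        if op == "delete":
            target.execute("DELETE FROM products WHERE id = ?", (row_id,))
        else:
            target.execute(
                "INSERT OR REPLACE INTO products (id, name, price_cents) "
                "VALUES (?, ?, ?)",
                (row_id, name, price_cents),
            )
        last_seq = seq
    target.commit()
    return last_seq
```

The caller stores the returned position and passes it to the next call, so each sync transfers only what changed since the previous one rather than the entire table.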

Data Warehousing

Data warehousing is a strategic approach in which data from various applications is consolidated into a centralized repository. The data is copied, cleaned, formatted, and stored within a dedicated structure known as a data warehouse. This allows you to access and query all your data from a single location without impacting the performance of your source applications.

The major goal of data warehousing is to provide you with a comprehensive, accurate, and consistent view of the data, which enhances data visibility and understanding across the organization. It serves as a central hub that facilitates efficient data analysis, reporting, and decision-making processes.

Data warehousing supports complex queries and advanced analytics as the data is organized and optimized for efficient retrieval and analysis.

6 Essential Requirements For Modern Data Integration

Deploying a data integration system is not just about merging data from different sources anymore. Modern data integration systems should meet a variety of requirements to effectively support the growing needs of modern businesses.

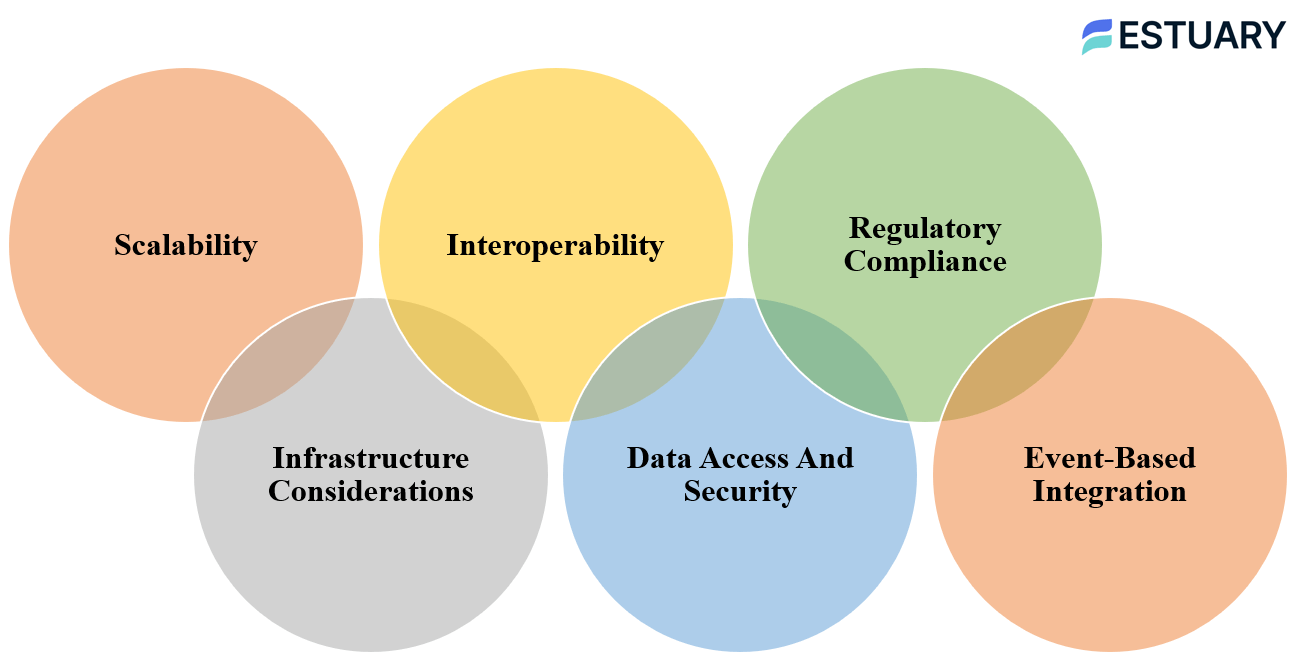

Scalability

As your business grows, so does your data. You should create a data integration strategy that is designed to handle increasing data loads and adapt to changes in your organization's workloads. It should feature automated resource allocation to efficiently manage your integration activities.

Infrastructure Considerations

Your strategy should accommodate a range of deployment options, including on-premises and cloud-based solutions. This flexibility allows you to make decisions based on what's best for a particular solution, rather than being restricted to a single deployment method.

Interoperability

Your data integration technology should integrate with your existing enterprise solutions. It needs to take the origin and destination of your data, the types of storage you employ, and the APIs you use into account.

Data Access & Security

For a successful data integration strategy, you should clearly define the types of data integrated and who has access to them. Security should be a top priority in your data integration system. Your integration strategy should consider potential vulnerabilities in your organization and ensure that data is protected during the integration process.

Regulatory Compliance

Your data integration strategy should not only comply with current regulatory requirements but also keep up with evolving data regulations. The cost of noncompliance can be high, so your strategy should adjust to new rules as they're implemented.

Event-Based Integration

Your data integration tools should be capable of responding to business events quickly. This allows for real-time updates and adjustments based on business needs, ensuring that your data integration system is as agile as your business.

How Estuary Can Help With Your Data Integration Needs

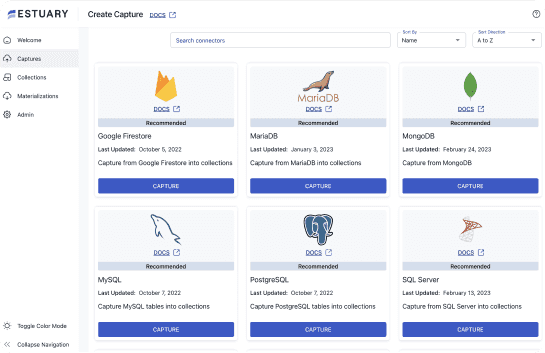

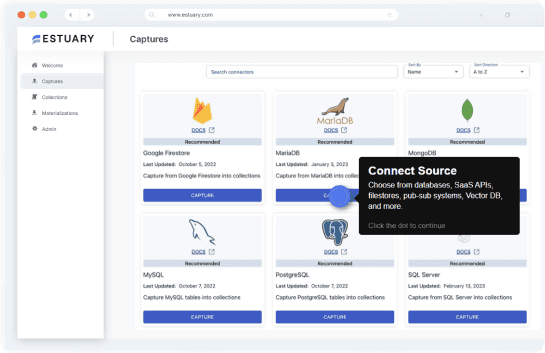

We at Estuary can help you set up scalable and efficient data integration solutions without the need for writing a single line of code. Our no-code DataOps platform, Flow, addresses modern data integration system requirements and applies various integration strategies and techniques quickly and easily.

Here’s how Flow fits into your data integration strategies & techniques:

- Batch integration: Flow efficiently handles batch integration with 200+ Airbyte connectors and even integrates it with real-time data.

- Real-time integration: You can capture and modify data from a wide range of sources within milliseconds using Flow’s real-time data pipelines.

- Cloud-based integration: Flow leverages the scalability, flexibility, and cost-effectiveness of cloud services, making your data accessible anytime, anywhere, and from any device.

- Streaming ETL: With Flow, you can develop streaming ETL pipelines. It efficiently pulls data from various sources, transforms it to your needs, and loads it into data storage.

- Data Replication & CDC: Flow supports both data replication and Change Data Capture (CDC) from databases. With it, you can move entire databases or efficiently capture changes made in your database.

- Data Warehousing: Flow streamlines data warehousing, allowing for the storage of data from different applications in one common data warehouse that offers a complete, accurate, and consistent view of your data.

Estuary Flow & Your Data Integration Requirements

Here’s how Estuary Flow can fulfill your data integration requirements.

- Scalability: Thanks to its automated resource allocation, Flow efficiently manages your increasing data loads, ensuring your data integration activities run smoothly, no matter how much data you're dealing with.

- Infrastructure considerations: Flow is deployed in the cloud, and you can choose whether to store your data in your own Google Cloud Storage or Amazon S3 bucket. Rather self-host? Contact us and let us know! You’ll be notified when we add support for self-hosting.

- Interoperability: Flow is built to mesh seamlessly with your existing enterprise solutions using connectors. With hundreds of connectors available, for everything ranging from SQL databases to SaaS solutions, your data integration needs are well covered with Flow. You can even request a new connector if you don’t find what you need.

- Data access & security: Flow lets you set up user roles and permissions to ensure that only authorized personnel have access to sensitive information. Plus, Flow keeps your data secure with strong encryption so you don’t have to worry about it falling into the wrong hands.

- Regulatory compliance: Flow provides granular control over data integration and management, allowing you to adapt swiftly to new regulations and requirements.

- Event-based integration: Flow is built on an event-driven architecture that helps you get real-time data updates and make adjustments as needed.

Conclusion

Data integration is key for organizations to efficiently use data. To conquer the challenges of data integration, organizations must arm themselves with a comprehensive arsenal of strategies and techniques.

But it can be tough to work through the details of a data integration strategy. Knowing the strategies is one thing, but having the right tools is just as vital. That's where Estuary Flow comes in. Whether you are looking at batch integration, cloud-based solutions, or even using your data in cutting-edge AI and ML applications, Flow seamlessly dovetails into your data integration matrix.

Don’t just take our word for it. We would love for you to experience the sheer versatility and prowess of Estuary Flow. Give it a spin by signing up for free. If you are not quite sure how Flow can help you, contact us today.