Connector stories: Apache Kafka

Apache Kafka is an extremely popular open-source event streaming platform. We talk to Estuary developer Alex about his process and the insights he gained building the Kafka connector.

About connector stories

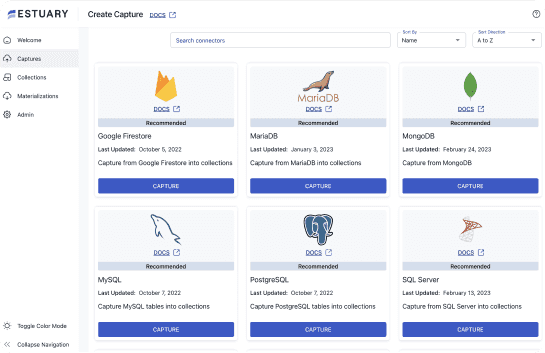

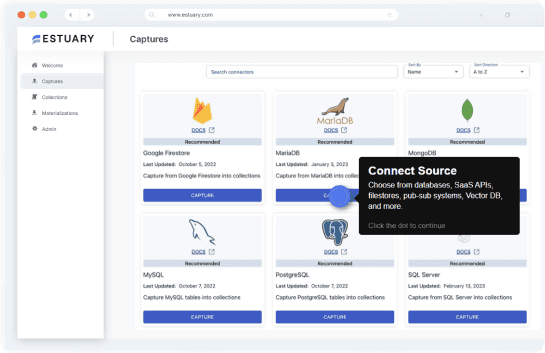

Estuary maintains a repository of open-source data connectors. These connectors are dual-licensed under Apache 2.0 and MIT and follow an open-source standard. You can read more about our philosophy on connectors here.

Though we encourage community developers to contribute in any way they see fit, the connectors we find most critical for integrating the modern data stack are built start to finish by Estuary developers. In this series, we talk to those developers about their process and the insights they’ve gained.

Introducing Estuary’s connector for Apache Kafka

Apache Kafka is an extremely popular open-source event streaming platform. It’s a powerful and scalable event bus that can be used with a huge variety of client systems. Kafka provides the real-time data foundation for many enterprises’ custom solutions. Its unopinionated nature makes it adaptable but sometimes challenging to implement.

Senior software engineer Alex built the Kafka connector immediately after he joined the Estuary team in July. Alex has a background in application development and 12 years of industry experience. Here’s his take on the connector:

Q: Why did Estuary prioritize a Kafka connector? What new capabilities does it provide?

A: Today, Kafka is often the primary way that organizations produce and store real-time data. This makes it a natural fit as a data source for Flow. Our connector allows us to pull the data from Kafka and send it to systems that can’t read directly from Kafka or understand the raw event data.

Q: Kafka itself is a message bus that should, in theory, be able to act as an organization’s data integration backbone. What additional possibilities does the Estuary connector enable?

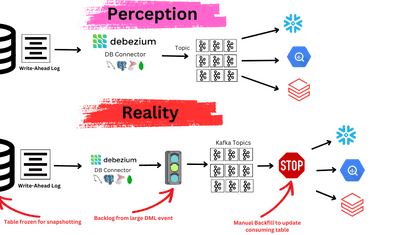

A: In theory, it does exactly that. Once an organization has gone all-in with Kafka, any system can read from or write to the central Kafka cluster directly. In practice, this requires that every system be intimately aware of Kafka. Each system needs to know how to connect to Kafka, read the data it cares about, and transform it into a format that is useful for that system. This requires custom application development work, which is often duplicative and error-prone. These bespoke ETL systems often turn real-time data in Kafka into batch jobs, introducing unnecessary lag into the data stream.

Our connector streamlines the process of reading data out of Kafka and exporting it to a variety of systems in real time. This gives systems access to that data with just a few lines of configuration, rather than custom development work. Flow becomes the bridge between Kafka and each system, so those systems need no direct knowledge of Kafka. Data lands in Snowflake or PostgreSQL, and the applications using those databases stay unchanged.

Q: What are a few example use cases you envision for this connector?

A: The first thing that comes to mind is Event Sourcing. Kafka can act as an event store, while Flow can easily produce Read Models derived from that event data. Applications read from their local Read Model data stores and publish events back to Kafka. Flow continuously aggregates events and updates the Read Models used by the entire system. This takes advantage of the strengths of both an event log like Kafka and relational databases.
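To make the Read Model idea concrete, here is a minimal sketch in plain Python. It does not use Flow or Kafka at all: the event shape (`type`, `account`, `amount`) and the function names are hypothetical, chosen only to show how a stream of events can be folded into a queryable view.

```python
from collections import defaultdict


def apply_event(read_model, event):
    """Fold a single event into the read model (account balances here)."""
    account = event["account"]
    if event["type"] == "deposited":
        read_model[account] += event["amount"]
    elif event["type"] == "withdrawn":
        read_model[account] -= event["amount"]
    # Unknown event types are ignored so new event kinds don't break the view.
    return read_model


def build_read_model(events):
    """Replay an ordered event log into a fresh read model."""
    read_model = defaultdict(int)
    for event in events:
        apply_event(read_model, event)
    return dict(read_model)


# Hypothetical events, standing in for messages read from a Kafka topic.
events = [
    {"type": "deposited", "account": "a1", "amount": 100},
    {"type": "withdrawn", "account": "a1", "amount": 30},
    {"type": "deposited", "account": "b2", "amount": 5},
]

balances = build_read_model(events)
```

In a continuous setting, `apply_event` would run once for each new event as it arrives, so the view stays current without replaying the whole log.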

The other easy win is for analytics. Event data isn’t necessarily the easiest format for BI tools to query. Flow can aggregate data from Kafka and materialize it directly into a Snowflake or PostgreSQL table to be used by your favorite BI tool. As new events are produced, the reports update in real time.

Q: Can you explain — on a high level — the approach you took to building it?

A: Estuary has embraced open source from the beginning. Our connectors are no different. We’ve been building our connectors to the same open protocol as Airbyte and others. Within this ecosystem, we’ve been focused on contributing connectors that can benefit from running in a continuous, low-latency environment.

At a finer-grained level, Kafka’s mental model is pretty compatible with Flow’s mental model. Both systems provide similar document-oriented streams of data, flexible messaging guarantees, segmentation of data streams, and partitioning within a single stream. Kafka topics can be re-read from a provided offset, which simplifies how we restart a capture task. We use our proposed Range extension to the connector spec to distribute reads across many Flow consumers, which lets us handle high-volume topics.
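The two ideas in that answer, resuming from a stored offset and splitting reads across consumers by range, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the connector's code: the in-memory list stands in for a Kafka partition, and `split_ranges` and `owner` are hypothetical helpers that assume each consumer owns a slice of a 32-bit hash space. The actual Range extension may work differently.

```python
import zlib


def split_ranges(consumers, space=2**32):
    """Divide the hash space into one contiguous, inclusive range per consumer."""
    step = space // consumers
    return [
        (i * step, space - 1 if i == consumers - 1 else (i + 1) * step - 1)
        for i in range(consumers)
    ]


def owner(topic, partition, ranges):
    """Return the index of the consumer whose range covers this partition."""
    key = zlib.crc32(f"{topic}/{partition}".encode())  # stable 32-bit hash
    for index, (low, high) in enumerate(ranges):
        if low <= key <= high:
            return index
    raise ValueError("ranges do not cover the hash space")


def read_from(log, offset):
    """Yield (next_offset, message) pairs starting at a stored offset."""
    for position in range(offset, len(log)):
        yield position + 1, log[position]


# An in-memory stand-in for one partition's log.
log = ["e0", "e1", "e2", "e3"]

# First run: process two messages, remembering the offset to resume from.
checkpoint = 0
reader = read_from(log, checkpoint)
for _ in range(2):
    checkpoint, _message = next(reader)

# After a restart: continue from the checkpoint instead of from the start.
resumed = [message for _, message in read_from(log, checkpoint)]

# Assign eight partitions of a hypothetical "orders" topic to three consumers.
ranges = split_ranges(3)
assignments = {p: owner("orders", p, ranges) for p in range(8)}
```

Because ownership is a pure function of the topic, the partition, and the ranges, each consumer can work out which partitions it should read without coordinating with the others.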

Q: Any big surprises while you were building this connector?

A: I’d heard Kafka’s reputation for being difficult to run, but I hadn’t quite internalized that when I started my work. Now I know why hosting for Kafka is so expensive. The most basic of cluster configurations was fairly straightforward, but as soon as I started changing the cluster configuration I started getting some pretty cryptic errors, or worse, none at all. User experience is an important thing to keep in mind, even with developer tools. Investing in this from the start can make a world of difference.

Q: Based on this project, do you have advice for other engineers who want to integrate Kafka with other systems?

A: If Kafka is the best tool for your job, use it — it’s great for a lot of things. That said, if it’s not the right tool for you, but your data lives in Kafka, try to figure out how to decouple your application from it. Then use the right tool with the right data model.

Interested in the connector code? You can find it here.

You can also try the Estuary Flow platform for free!